QFM013: Machine Intelligence Reading List April 2024

Everything that I found interesting last month about machines behaving intelligently.

Tags: qfm, machine, intelligence, reading, list, april, 2024

Photo by Amanda Dalbjörn on Unsplash

In this month’s edition of the Quantum Fax Machine’s Machine Intelligence Reading List, we challenge long-standing myths about computational limitations and explore how computability theory shapes machine behaviour. What Computers Cannot Do: The Consequences of Turing-Completeness dissects the inherent limitations of computers through the lens of the Halting Problem and Turing-completeness, offering crucial insights into boundaries that programmers often overlook.

We also look at the practical side of machine intelligence with Replicate.com, which provides a comprehensive platform for deploying and scaling AI models. This tool simplifies the use of open-source AI, offering an accessible way for businesses to integrate machine intelligence into their workflows. On an equally practical note, Outset.ai uses a variety of generative AI techniques to help synthesise video, audio, and text conversations.

In the broader landscape of AI agent architectures, The Landscape of Emerging AI Agent Architectures for Reasoning, Planning, and Tool Calling: A Survey offers a meticulous overview of emerging methods that bolster reasoning and planning capabilities, emphasising the importance of advanced architectures in making machines more adept at problem-solving. Continuing the exploratory theme of agentic behaviour, An Agentic Design for AI Consciousness explores (speculatively) how agent-based architectures might help LLMs move towards a more human-like level of consciousness.

For those wishing to understand how LLMs work, Transformer Math 101 dives in to explain LLMs from a mathematical perspective. 3Blue1Brown: Neural Networks covers similar topics in a fantastic set of from-the-ground-up explanatory videos. And if you just want a TED talk style overview of what might be coming next, then What Is an AI Anyway? by Mustafa Suleyman is a great place to start your learning journey.

If you are a gen-AI sceptic, check out Looking for AI Use Cases and Generative AI is still a solution in search of a problem, which question what, if any, use cases have genuine value now and into the future.

And many more!

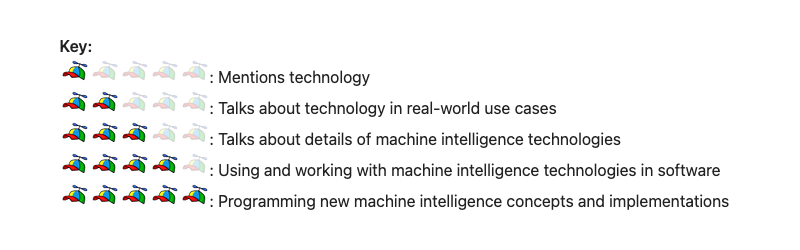

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

What Computers Cannot Do: The Consequences of Turing-Completeness: This article tackles common misconceptions among programmers about what computers can and cannot do, diving deep into the Halting Problem, Turing-completeness, and the consequences of universal Turing machines (UTMs). The author draws on his own learning journey to highlight the hard and soft limits of computation, arguing that what computers cannot do is something many programmers overlook. Grounded in historical context, mathematical proofs, and practical implications, the article makes a compelling case that this is fundamental programming knowledge.

#Tech #Programming #TuringMachines #Computability #HaltingProblem
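
The heart of the Halting Problem is a diagonal argument: given any claimed halting decider, you can build a program that does the opposite of whatever the decider predicts about it. The Python sketch below makes that construction concrete. It is a simplified illustration, not the article’s code; `make_contrarian` and `refute` are hypothetical names, and a real proof covers every possible decider rather than the two toy ones tried here.

```python
from typing import Callable

# A hypothetical "halting decider": given a zero-argument function, it predicts
# whether calling it would return (True) or run forever (False).
Decider = Callable[[Callable[[], object]], bool]


def make_contrarian(halts: Decider) -> Callable[[], str]:
    """Build a program that does the opposite of what `halts` predicts about it."""
    def contrarian() -> str:
        if halts(contrarian):
            while True:  # predicted to halt, so loop forever
                pass
        return "halted"  # predicted to loop, so halt immediately
    return contrarian


def refute(halts: Decider) -> str:
    """Show that `halts` is wrong about its own contrarian program."""
    program = make_contrarian(halts)
    if halts(program):
        # Calling `program` would never return, so we deliberately do not run it.
        return "predicted halt, but the program loops forever"
    program()  # safe: this branch of the contrarian returns immediately
    return "predicted loop, but the program halted"


if __name__ == "__main__":
    print(refute(lambda f: True))
    print(refute(lambda f: False))
```

Whatever answer `halts` gives about `program`, it is wrong, and no cleverness inside `halts` escapes this, because the contrarian simply consults it and does the opposite.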

Replicate.com: Replicate provides a user-friendly API platform to run, fine-tune, and deploy open-source AI models for generating images, text, music, videos, and more, helping businesses build and scale AI products easily. The platform offers flexibility with automatic scaling, efficient infrastructure, and customisable pricing.

#AI #MachineLearning #API #OpenSource #ReplicateAI

The Landscape of Emerging AI Agent Architectures for Reasoning, Planning, and Tool Calling: A Survey: This survey, published on arXiv, reviews recent AI agent implementations, comparing single-agent and multi-agent architectures and the design choices that help them reason, plan, and call tools when solving complex problems.

#AIAgents #AgentArchitectures #Reasoning #Planning #ToolCalling
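
Many agent designs in this space share a basic loop: the model either requests a tool call or returns a final answer, and each tool result is fed back to it as an observation. A minimal Python sketch of that loop follows, assuming a scripted stand-in for the LLM; `scripted_model`, `Step`, and the `calculator` tool are hypothetical and not taken from the survey.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    """One model decision: either a tool call or a final answer."""
    tool: Optional[str] = None
    argument: str = ""
    answer: Optional[str] = None


# Hypothetical tool registry: tool name -> function from string to string.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}


def scripted_model(history: list[str]) -> Step:
    """Stand-in for an LLM: calls the calculator once, then answers."""
    if not any(line.startswith("observation:") for line in history):
        return Step(tool="calculator", argument="2+3")
    last = history[-1].removeprefix("observation: ")
    return Step(answer=f"The sum is {last}")


def run_agent(task: str, model: Callable[[list[str]], Step], max_steps: int = 5) -> str:
    history = [f"task: {task}"]
    for _ in range(max_steps):
        step = model(history)
        if step.answer is not None:
            return step.answer
        # Execute the requested tool and feed its result back to the model.
        result = TOOLS[step.tool](step.argument)
        history.append(f"action: {step.tool}({step.argument})")
        history.append(f"observation: {result}")
    return "gave up after max_steps"


if __name__ == "__main__":
    print(run_agent("What is 2 + 3?", scripted_model))
```

In a real agent, `model` would call an LLM and parse its tool-call output; the `max_steps` cap is a simple guard against loops that never converge.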

This prompting technique is insanely useful: This tweet thread gives a short, simple overview of an LLM prompting technique that can make prompts more effective.

#PromptEngineering #LLM #Hack #Level #Tweet

Transformer Math 101: “Transformer Math 101” by Quentin Anthony, Stella Biderman, and Hailey Schoelkopf presents an overview of the fundamental mathematics governing the compute and memory usage of transformer models. It shows how simple equations can be used to estimate training compute and VRAM requirements, and offers insights into compute and memory optimisations for efficient training. The post is a valuable resource for understanding the OpenAI and DeepMind scaling laws, and discusses strategies such as mixed-precision training and sharded optimisers for keeping resource requirements manageable.

#transformers #NLP #machinelearning #scalinglaws #mixedprecision
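
The post’s headline compute rule is that training a transformer costs roughly C ≈ 6PD FLOPs, where P is the parameter count and D the number of training tokens. A minimal Python sketch of that arithmetic follows. The memory function assumes one commonly cited accounting for mixed-precision Adam (16 bytes per parameter: half-precision weights and gradients plus fp32 optimizer state, as in the ZeRO paper) and ignores activations; the cluster size and per-GPU throughput in the example are hypothetical.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute: C ~= 6 * P * D (forward plus backward pass)."""
    return 6 * params * tokens


def training_memory_bytes(params: float, bytes_per_param: float = 16) -> float:
    """Rough weight + gradient + optimizer memory for mixed-precision Adam.

    Assumed breakdown (ZeRO-style): 2 bytes half-precision weights, 2 bytes
    half-precision gradients, 12 bytes fp32 master weights, momentum and
    variance. Activations are NOT included.
    """
    return params * bytes_per_param


def training_days(flops: float, gpus: int, achieved_flops_per_gpu: float) -> float:
    """Wall-clock estimate given an assumed sustained throughput per GPU."""
    seconds = flops / (gpus * achieved_flops_per_gpu)
    return seconds / 86_400


if __name__ == "__main__":
    c = training_flops(7e9, 1e12)  # 7B parameters, 1T tokens
    print(f"{c:.2e} training FLOPs")
    print(f"{training_memory_bytes(7e9) / 1e9:.0f} GB before activations")
    # Hypothetical cluster: 256 GPUs, each sustaining 150 TFLOP/s.
    print(f"{training_days(c, 256, 150e12):.1f} days")
```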

Outset.ai: Revolutionizing User Surveys with GPT-4: Outset.ai uses GPT-4 to run AI-moderated conversations with respondents, aiming to combine the scale of surveys with the depth of one-to-one interviews and so give richer insight into customer preferences and behaviours. The company recently raised a $3.8M seed round.

#OutsetAI #GPT4 #UserSurveys #TechInnovation #SeedInvestment

CoreNet: A Library for Training Deep Neural Networks: CoreNet is a comprehensive library for training both conventional and novel deep neural network models across a wide range of tasks, including foundation models (such as CLIP and LLMs), object classification, object detection, and semantic segmentation. It supports research and engineering work by providing training recipes, pre-trained model weights and, through its MLX examples, efficient execution on Apple Silicon. The initial release includes OpenELM, CatLIP, and several MLX examples.

#CoreNet #DeepLearning #FoundationModels #ObjectDetection #AppleSilicon

Anthropic’s Prompt Engineering Interactive Tutorial: Anthropic has published a publicly accessible, interactive prompt engineering tutorial as a Google Sheets spreadsheet. Full interaction requires an Anthropic API key, but a static answer key is also available to view. The tutorial walks through making a copy in your own Google Drive, installing and enabling the Claude for Sheets extension, and adding your API key. It also covers usage notes, tips for getting started, and how to navigate between pages.

#Anthropic #PromptEngineering #InteractiveTutorial #GoogleSheets #APIKey

Awesome Code AI: This is a compilation of AI coding tools that focuses on automation, security, code completion, and AI assistance for developers. The project aims to list tools that assist with code generation, completion, and refactoring to enhance developer productivity.

#GitHub #AI #CodingTools #DeveloperProductivity #OpenSource

The Death of the Big 4: AI-Enabled Services Are Opening a Whole New Market: The article discusses the emergence of AI-enabled services as a significant shift in the market, challenging the dominance of traditional services firms like the Big 4. Advances in AI technology and the availability of venture capital are enabling new companies to offer more efficient and innovative services. These AI-driven firms are potentially more scalable and can deliver higher value to clients by automating tasks and leveraging human talent more effectively. The potential for these companies is vast, given the size of the services industry and the opportunity for disruption.

#AI #VentureCapital #MarketDisruption #ServicesIndustry #Innovation

RAFT: A new way to teach LLMs to be better at RAG: This article introduces RAFT (Retrieval-Augmented Fine-Tuning), a technique that combines retrieval-augmented generation with fine-tuning to make Large Language Models (LLMs) better at answering questions in a specific domain. Written by Cedric Vidal and Suraj Subramanian, the article presents RAFT as a new strategy for domain-specific adaptation that overcomes limitations of existing methods by adapting the model to the domain before it is used for RAG. Based on research from UC Berkeley using Meta Llama 2 and Azure AI Studio, RAFT promises better performance on domain-specific tasks by teaching the model to draw on both retrieved documents and fine-tuned domain knowledge when generating answers.

#AI #RAFT #LargeLanguageModels #DomainAdaptation #MachineLearning
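
In the RAFT paper, each training example pairs a question with a set of documents: in a fraction P of examples the “oracle” document containing the answer is mixed in with distractors, and in the rest only distractors are shown, so the model learns both to use relevant context and to ignore irrelevant context. A minimal Python sketch of that data-preparation step follows, assuming documents are in-memory strings; `make_raft_example` and its parameters are hypothetical names, not part of any released RAFT tooling.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class RaftExample:
    question: str
    context: list[str]  # documents shown alongside the question during fine-tuning
    answer: str         # target answer (a chain-of-thought answer in the paper)


def make_raft_example(question: str, oracle_doc: str, answer: str,
                      distractor_pool: list[str], k: int = 4,
                      p_oracle: float = 0.8,
                      rng: Optional[random.Random] = None) -> RaftExample:
    """Build one RAFT-style example with k context documents.

    With probability p_oracle: the oracle document plus k - 1 distractors.
    Otherwise: k distractors only, so the answer must come from knowledge
    the model absorbs during fine-tuning.
    """
    if rng is None:
        rng = random.Random()
    pool = [d for d in distractor_pool if d != oracle_doc]
    if rng.random() < p_oracle:
        context = rng.sample(pool, k=min(k - 1, len(pool))) + [oracle_doc]
        rng.shuffle(context)  # the oracle's position should not give it away
    else:
        context = rng.sample(pool, k=min(k, len(pool)))
    return RaftExample(question, context, answer)


if __name__ == "__main__":
    pool = ["doc A", "doc B", "doc C", "doc D", "doc E"]
    ex = make_raft_example("Q?", "oracle doc", "A.", pool, rng=random.Random(42))
    print(ex.context)
```

In the paper the target answers are chain-of-thought explanations that quote the oracle document; here `answer` is simply passed through.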

The Pipe: The Pipe is a multimodal tool that streamlines the process of feeding various data types, such as PDFs, URLs, slides, YouTube videos, and more, into vision-language models like GPT-4V. It’s designed for LLM and RAG applications requiring both textual and visual understanding across a wide array of sources. Available as a hosted API or for local setup, The Pipe extracts text and visuals, optimizing them for multimodal models. It supports an extensive list of file types, including complex PDFs, web pages, codebases, and git repos, ensuring comprehensive content extraction.

#ThePipe #GPT4V #MultimodalTool #DataExtraction #VisionLanguageModels

OpenAPI AutoSpec: OpenAPI AutoSpec is designed to automatically generate accurate OpenAPI specifications for any local website or service in real-time. By acting as a local server proxy, it captures HTTP traffic and documents API behaviors, allowing for the dynamic creation of OpenAPI 3.0 specifications. This solution facilitates easier sharing and understanding of APIs by documenting requests, responses, and the interactions between different server components without requiring manual input or extensive setup. Key features include real-time documentation, ease of exporting specifications, and support for fine-tuning documentation through host filtering and path parameterisation.

#OpenAPIAutoSpec #APIDocumentation #OpenAPISpecification #RealTimeAPIDocs #AutomaticSpecificationGeneration
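
Path parameterisation means collapsing concrete request paths such as `/users/42` into templated OpenAPI paths such as `/users/{usersId}`, so that many observed requests map to one documented operation. The Python sketch below shows one way to do this, assuming that purely numeric segments and UUIDs are parameter values and naming each after the preceding segment; these heuristics are assumptions, not AutoSpec’s actual rules.

```python
import re

# Assumed heuristics for "this path segment is a parameter value".
_NUMERIC = re.compile(r"^\d+$")
_UUID = re.compile(
    r"^[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}$"
)


def _looks_like_id(segment: str) -> bool:
    return bool(_NUMERIC.match(segment) or _UUID.match(segment))


def parameterise(path: str) -> str:
    """Map a concrete request path to an OpenAPI-style path template."""
    path = path.split("?", 1)[0]  # ignore any query string
    segments = [s for s in path.split("/") if s]
    out: list[str] = []
    for i, segment in enumerate(segments):
        if _looks_like_id(segment):
            # Name the parameter after the preceding literal segment (users -> usersId)
            # so that several parameters in one path get distinct names.
            base = out[-1] if out and not out[-1].startswith("{") else f"param{i}"
            out.append("{" + base + "Id}")
        else:
            out.append(segment)
    return "/" + "/".join(out)


def templates(observed_paths: list[str]) -> list[str]:
    """Distinct templated paths seen in captured traffic."""
    return sorted({parameterise(p) for p in observed_paths})


if __name__ == "__main__":
    print(templates(["/users/1", "/users/2/orders/9", "/health"]))
```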

OpenAI Create Batch: The API reference for creating a job with OpenAI’s Batch API, which processes a file of requests asynchronously within a 24-hour completion window, at a lower cost than making the equivalent synchronous calls.

#OpenAI #BatchAPI #API #LLM #AsyncProcessing
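
OpenAI’s Batch API takes its input as a JSONL file in which each line is one request, carrying a `custom_id`, an HTTP `method`, a target `url`, and the request `body`. The Python sketch below builds such a file’s contents locally, with no network calls; the prompts and the model name are placeholders.

```python
import json


def batch_lines(prompts: list[str], model: str) -> str:
    """Build JSONL content for a Batch API input file: one chat request per line."""
    lines = []
    for i, prompt in enumerate(prompts):
        request = {
            "custom_id": f"request-{i}",    # used to match results back to inputs
            "method": "POST",
            "url": "/v1/chat/completions",  # endpoint every request in the batch targets
            "body": {                       # the payload the endpoint would normally get
                "model": model,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(batch_lines(["Summarise RAFT in one line.", "What is a UTM?"], "example-model"))
```

With the official `openai` Python SDK, the file is then uploaded with `client.files.create(..., purpose="batch")` and submitted with `client.batches.create(input_file_id=..., endpoint="/v1/chat/completions", completion_window="24h")`; results come back as another JSONL file keyed by `custom_id`.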

LLM in a flash: Efficient Large Language Model Inference with Limited Memory: The paper presents a solution for efficiently running large language models (LLMs) that exceed available DRAM capacity by storing model parameters in flash memory and loading them into DRAM on demand. It introduces two key techniques: “windowing,” which minimises data transfer by reusing neurons, and “row-column bundling,” which leverages flash memory’s strengths by reading larger contiguous data chunks. These methods enhance inference speed and enable models up to twice the size of available DRAM to run effectively on devices with limited memory.

#LLMOptimization #AIResearch #EfficientInference #FlashMemory #NaturalLanguageProcessing
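
The idea behind windowing is that neurons activated for recent tokens are likely to be needed again, so keeping them in DRAM means only newly activated neurons have to be read from flash. A minimal Python sketch that counts flash reads per token under that policy follows. It assumes the set of active neurons for each token is known in advance (the paper predicts it) and treats the DRAM cache as exactly the neurons used by the last `window` tokens; `windowed_loads` is a hypothetical name.

```python
def windowed_loads(active_per_token: list[set[int]], window: int) -> list[int]:
    """Number of neurons read from flash for each token under a sliding window.

    Neurons used by any of the previous `window` tokens are assumed to still be
    in DRAM; only neurons active now but absent from that window are loaded.
    """
    loads = []
    for t, active in enumerate(active_per_token):
        recent = active_per_token[max(0, t - window):t]
        cached: set[int] = set().union(*recent)
        loads.append(len(active - cached))
    return loads


if __name__ == "__main__":
    # Hypothetical active-neuron sets for four consecutive tokens.
    tokens = [{1, 2, 3}, {2, 3, 4}, {3, 4, 5}, {1, 5}]
    for w in (0, 1, 2):
        per_token = windowed_loads(tokens, w)
        print(f"window={w}: {per_token} (total {sum(per_token)})")
```

A larger window cuts flash traffic but keeps more neurons resident in DRAM, which is the trade-off the window size controls.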

What Is an AI Anyway? | Mustafa Suleyman | TED: In a thought-provoking TED talk, Mustafa Suleyman dives into the complex world of artificial intelligence, questioning the nature of AI and its implications for society. Even the experts developing AI technologies find it challenging to predict its trajectory, framing AI development as an exploration into the unknown. This video shines a light on the philosophical and technological questions surrounding AI, pushing viewers to reconsider what it means to create intelligent systems.

#TED #AI #ArtificialIntelligence #Technology #Future

Full Steam Ahead: The 2024 MAD (Machine Learning, AI & Data) Landscape: The 2024 MAD (Machine Learning, AI & Data) landscape overview marks its tenth annual release, capturing the current state and evolution of the data, analytics, machine learning, and AI ecosystem. With 2,011 companies featured, this year’s landscape illustrates significant growth and the ongoing fusion of ML, AI, and data, highlighting the shift from niche to mainstream. The article explores various emerging trends, significant industry shifts, and the role of technological advancements in shaping the future of the ecosystem.

#MachineLearning #ArtificialIntelligence #DataAnalytics #TechTrends2024 #MADLandscape

Natural language instructions induce compositional generalization in networks of neurons: This study uses advances in natural language processing to model the human ability to follow instructions for novel tasks and to describe a learned task in words. Neural network models, particularly RNNs augmented with pretrained language models such as SBERT, were able to interpret and generate natural language instructions, allowing them to perform previously unseen tasks with an average of 83% accuracy based on instructions alone. The models predict neural representations that might be observed in human brains when linguistic and sensorimotor skills are integrated, and highlight how language can structure knowledge in the brain to support general cognition.

#NaturalLanguageProcessing #NeuralNetworks #CognitiveScience #LanguageLearning #Neuroscience

CometLLM: Logging and Visualizing LLM Prompts: CometLLM is a tool for logging, tracking, and visualising Large Language Model (LLM) prompts and chains. Its features include automatic prompt tracking for OpenAI chat models, visualisation of prompts and responses, chain execution logging for detailed analysis, user feedback tracking, and diffing of prompts in the UI, all aimed at streamlining LLM workflows, easing debugging, and making results reproducible. The project is open source under the MIT licence and is actively developed, with numerous contributors and regular releases.

#LLM #CometLLM #AI #OpenAI #MachineLearning

GR00T: NVIDIA’s moonshot to solve embodied AI: Project GR00T aims to build a versatile foundation model enabling humanoid robots to learn from multimodal instructions and perform diverse tasks. Leveraging NVIDIA’s technology stack, it’s set to redefine humanoid learning through simulation, training, and deployment, while supporting a global collaborative ecosystem.

#NVIDIA #GR00T #HumanoidRobots #FoundationModels #EmbodiedAI

Making Deep Learning Go Brrrr From First Principles: This guide discusses optimising deep learning performance by understanding and acting upon the three main components involved in running models: compute, memory bandwidth, and overhead. It explains the importance of identifying whether a model is compute-bound, memory-bandwidth-bound, or overhead-bound, offering specific strategies for each case, such as maximising GPU utilisation, operator fusion, and minimising Python overhead. The post emphasises the power of first principles reasoning in making deep learning systems more efficient and the role of specialised hardware in enhancing compute capabilities.

#DeepLearning #PerformanceOptimization #GPUUtilization #OperatorFusion #PythonOverhead
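
One way to apply the post’s compute-versus-bandwidth reasoning is to compare an operation’s arithmetic intensity (FLOPs per byte moved) with the hardware’s ratio of peak compute to peak bandwidth. A rough Python sketch follows. The hardware figures (312 TFLOP/s and 1.5 TB/s, roughly A100-class) are illustrative assumptions, the byte counts assume each input is read once and each output written once, and overhead-bound cases are not modelled.

```python
def elementwise_cost(n: int, bytes_per_elem: int = 4) -> tuple[float, float]:
    """Unary elementwise op on n values: ~1 FLOP each; read and write every element."""
    return float(n), 2.0 * n * bytes_per_elem


def matmul_cost(n: int, bytes_per_elem: int = 2) -> tuple[float, float]:
    """Square n x n matmul: 2n^3 FLOPs; read A and B, write C, once each."""
    return 2.0 * n**3, 3.0 * n * n * bytes_per_elem


def classify(flops: float, bytes_moved: float,
             peak_flops: float, peak_bandwidth: float) -> str:
    """Compare arithmetic intensity with the hardware's FLOPs-per-byte balance point."""
    intensity = flops / bytes_moved
    machine_balance = peak_flops / peak_bandwidth
    return "compute-bound" if intensity >= machine_balance else "memory-bandwidth-bound"


if __name__ == "__main__":
    PEAK_FLOPS = 312e12  # assumed half-precision tensor-core peak, FLOP/s
    PEAK_BW = 1.5e12     # assumed memory bandwidth, bytes/s
    cases = {
        "elementwise, 1M fp32 values": elementwise_cost(1_000_000),
        "matmul 64x64 fp16": matmul_cost(64),
        "matmul 4096x4096 fp16": matmul_cost(4096),
    }
    for name, (f, b) in cases.items():
        print(f"{name}: {classify(f, b, PEAK_FLOPS, PEAK_BW)}")
```

This is also why the post recommends operator fusion for chains of elementwise operations: fusing them avoids writing and re-reading intermediates, which raises arithmetic intensity.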

Thoughts on the Future of Software Development: The article discusses the evolving landscape of software development, highlighting the impact of Large Language Models (LLMs) on creative and coding tasks. It challenges the notion that machines cannot think creatively, illustrating a shift towards more sophisticated AI capabilities in software development. The piece delves into various aspects of software development beyond coding, like gathering requirements and collaborating with teams, and suggests a future where AI could take on more of these tasks. It also presents frameworks for understanding AI’s current and potential roles in software development, emphasising the importance of human oversight and the unlikely replacement of software developers by AI in the near future.

#SoftwareDevelopment #AI #LLMs #Coding #Technology

Dify: Dify is an open-source LLM app development platform designed to streamline the transition from prototypes to production-ready AI applications. Its user-friendly interface brings together AI workflows, RAG pipelines, agent capabilities, and model management, among other features. With support for numerous proprietary and open-source LLMs, Dify caters to a broad spectrum of development needs, offering tools for prompt crafting, model comparison, and app monitoring. Key offerings include comprehensive model support, RAG engines, and backend services, supplemented by enterprise features for access control and deployment flexibility.

#AI #OpenSource #LLM #AppDevelopment #Dify

LLM inference speed of light: This post delves into the theoretical limits of language model inference speed, using ‘calm’, a fast CUDA implementation of transformer-based language model inference, to show that the process is inherently bandwidth-limited because of the nature of matrix-vector multiplication and attention computation. It details the gap between ALU (Arithmetic Logic Unit) throughput and memory bandwidth, using numerical examples for modern CPUs and GPUs to show why language model inference cannot exceed certain speeds. The piece also explores the potential for optimisation through hardware and software improvements, discussing how tactics like speculative decoding and batching can improve ALU utilisation and inference efficiency.

#LanguageModel #InferenceSpeed #CUDA #ALUOptimization #BandwidthLimitation
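To make the bandwidth argument concrete, here is a back-of-envelope sketch in Python. It is not taken from the post: the 7B-parameter fp16 model, the 1 TB/s memory bandwidth, and the 100 TFLOP/s peak compute are illustrative assumptions, and the sketch ignores KV-cache reads, which the post also accounts for. The idea is that at batch size 1 every weight must be streamed from memory for each generated token, so bandwidth divided by model size bounds the decode rate, while batching raises ALU utilisation by reusing each fetched weight across several sequences.

```python
# Back-of-envelope decode-speed bounds for a bandwidth-bound transformer.
# All hardware and model numbers are illustrative assumptions, not figures from the post.

def max_tokens_per_second(n_params: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decoding speed.

    Assumes every weight is read from memory once per generated token and
    ignores KV-cache reads for attention (which would lower the bound further).
    """
    bytes_per_token = n_params * bytes_per_param
    return bandwidth_bytes_per_s / bytes_per_token


def arithmetic_intensity(batch_size: int, bytes_per_param: float) -> float:
    """FLOPs performed per byte of weights read.

    Each weight takes part in one multiply-add (2 FLOPs) per sequence in the
    batch, so batching increases the work done per byte fetched.
    """
    return 2 * batch_size / bytes_per_param


def alu_utilisation(batch_size: int, bytes_per_param: float,
                    peak_flops: float, bandwidth_bytes_per_s: float) -> float:
    """Fraction of peak compute usable before memory bandwidth becomes the limit."""
    ridge_point = peak_flops / bandwidth_bytes_per_s  # FLOPs per byte the ALUs can absorb
    return min(1.0, arithmetic_intensity(batch_size, bytes_per_param) / ridge_point)


if __name__ == "__main__":
    # Hypothetical 7B-parameter model in fp16 on a GPU with 1 TB/s and 100 TFLOP/s.
    params, fp16, bw, flops = 7e9, 2, 1e12, 1e14
    print(f"{max_tokens_per_second(params, fp16, bw):.1f} tokens/s max")  # 71.4 tokens/s max
    for batch in (1, 64, 256):
        print(batch, alu_utilisation(batch, fp16, flops, bw))
```

At batch size 1 the sketch reports 1% ALU utilisation under these assumptions: the arithmetic units sit mostly idle while the weights stream in from memory.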

Stanford AI Index Report 2024: An In-depth Analysis of AI’s Current State: The seventh edition of the Stanford AI Index Report, its most comprehensive to date, documents AI’s growing influence on society. It covers technical advances, public perceptions, and geopolitical dynamics, and provides new data on AI training costs, responsible AI, and AI’s impact on science and medicine. The report charts both significant progress and ongoing challenges in deploying AI across sectors.

#AIIndexReport #AIAdvancements #TechnicalAI #ResponsibleAI #AIinScienceAndMedicine

The Question No LLM Can Answer: The article discusses the inability of Large Language Models (LLMs) like GPT-4 and Llama 3 to correctly answer specific questions, such as identifying a particular episode of ‘Gilligan’s Island’ that involves mind reading. Despite being trained on vast amounts of data, these models either hallucinate answers or fail to find the correct one, highlighting a fundamental limitation in how LLMs understand and process information. The phenomenon of AI models favouring the number ‘42’ when asked to choose a number between 1 and 100 is also explored, suggesting biases introduced during training.

#AI #LLMs #Technology #KnowledgeLimitations #DataBias

VASA-1: Lifelike Audio-Driven Talking Faces Generated in Real Time: Microsoft Research has introduced VASA-1, a framework that generates lifelike talking faces of virtual characters from a single static image and a speech audio clip. VASA-1 closely synchronises lip movements with the audio, captures subtle facial nuances, and produces natural head motion, giving a strong sense of realism and liveliness. Key innovations include a holistic model of facial dynamics and head movement that operates in a face latent space, which is itself learned from videos. The method substantially outperforms existing approaches across various metrics and can generate high-quality, realistic talking-face videos in real time.

#MicrosoftResearch #VASA1 #AI #TechnologyInnovation #VirtualAvatars

An Agentic Design for AI Consciousness: Chris Sim explores what he sees as the next frontier in AI: building a conscious AI system. Rejecting dualism in favour of a naturalist view, the article proposes creating AI consciousness through a blend of designed and emergent systems. This ambitious blueprint outlines an AI mind with subconscious and conscious processes, stigmergic communication, and large language models (LLMs) as the foundation for its cognitive functions. It describes a system that can think, learn, adapt, and potentially attain consciousness, a goal that remains highly speculative and theoretical with today’s technology.

#AIConsciousness #GenerativeAI #NaturalistView #Stigmergy #TechnologyAdvancement

How (Specifically) AI Will 100x Human Creativity and Output: Daniel Miessler argues that AI’s real potential to multiply human creativity and output lies not in solving imagined problems but in removing practical barriers of execution and scale. He describes how AI can take on tasks beyond individual human capacity and access, in effect providing an ‘army’ of support that could unlock untold human potential. His central point is that the real limitation is not human creativity or intelligence, but the practical ability to enact ideas at scale.

#AI #HumanCreativity #TechInsights #FutureOfWork #DanielMiessler

Enhancing GPT’s Response Accuracy Through Embeddings-Based Search: This guide explains how to use GPT to answer questions on topics outside its training data with a two-step Search-Ask approach. First, embedding-based search retrieves the sections of a text library most likely to contain the answer, even when they share no exact phrasing with the question. Second, those sections are inserted into the prompt and GPT is asked to answer. The guide argues that this yields more accurate answers than fine-tuning, which is less reliable for factual recall, because the inserted text gives the model the context it needs.

#GPT #OpenAI #Embeddings #QuestionAnswering #Technology
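The two-step Search-Ask approach can be sketched in a few lines of Python. This is a minimal illustration rather than the guide’s code: the three-dimensional ‘embeddings’ are hand-written toy vectors standing in for the output of a real embeddings model, and the prompt wording is an assumption. The final prompt would then be sent to GPT through whichever chat API you use.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vec: list[float], library: list[tuple[str, list[float]]], top_k: int = 2) -> list[str]:
    """Step 1 (Search): rank library sections by similarity to the query embedding."""
    ranked = sorted(library, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    return [text for text, _vec in ranked[:top_k]]


def build_prompt(question: str, sections: list[str]) -> str:
    """Step 2 (Ask): insert the retrieved sections into the prompt ahead of the question."""
    context = "\n\n".join(sections)
    return (
        "Answer the question using only the sections below. "
        'If the answer is not there, reply "I could not find an answer."\n\n'
        f"{context}\n\nQuestion: {question}"
    )


# Hypothetical pre-computed embeddings (real ones have hundreds or thousands of dimensions).
LIBRARY = [
    ("pgvector adds a vector column type to Postgres.", [0.9, 0.1, 0.0]),
    ("The office is closed on public holidays.", [0.0, 0.2, 0.9]),
    ("Embeddings map text to points in a vector space.", [0.7, 0.6, 0.1]),
]

if __name__ == "__main__":
    query_vec = [1.0, 0.2, 0.0]  # toy embedding of the question below
    sections = search(query_vec, LIBRARY, top_k=2)
    print(build_prompt("How can I store embeddings in Postgres?", sections))
```

Note that the second section ranks above the first only by vector similarity, not keyword overlap, which is what lets this approach find relevant passages phrased differently from the question.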

Comprehensive Guide to AI Tools and Resources: This guide catalogues a wide range of AI tools and resources, organised into sections such as AI models and infrastructure, developer tools, and model development platforms. Notable mentions include LetsBuild.AI, a community-driven platform for AI enthusiasts; Hugging Face for collaborative model development; TensorFlow and PyTorch for deep learning; and Ollama for running large language models locally. The guide also covers vector databases, command-line tools, and resources for AI model monitoring and packaging, aiming to serve as a roadmap for developers and researchers interested in AI.

#AI #DeveloperTools #MachineLearning #DeepLearning #Technology

3Blue1Brown: Neural Networks: This series explores neural networks and machine learning through beautifully animated lessons. They range from the basics, such as what a neural network is and how it learns through gradient descent and backpropagation, to more advanced topics such as GPT models and the attention mechanism in transformers. It suits both beginners keen to understand the math behind these concepts and seasoned professionals looking for a refresher.

#3Blue1Brown #NeuralNetworks #MachineLearning #GradientDescent #Backpropagation
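As a small companion to the series, the sketch below fits a straight line with gradient descent in plain Python. The data and learning rate are made-up assumptions; the hand-derived gradients in the backward pass are the same chain-rule calculation that backpropagation automates for networks with many layers.

```python
def train_linear(xs: list[float], ys: list[float],
                 lr: float = 0.05, steps: int = 2000) -> tuple[float, float]:
    """Fit y ≈ w*x + b by minimising mean squared error with gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Forward pass: prediction error for each example
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass: d(MSE)/dw and d(MSE)/db via the chain rule
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Update: take a small step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
    w, b = train_linear(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}")  # w = 2.000, b = 1.000
```

If the learning rate is set too high the updates overshoot and diverge, which is one of the practical trade-offs the videos discuss when explaining gradient descent.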

Bland.ai Turbo: Bland.AI has introduced Turbo, which it bills as the fastest conversational AI to date, promising sub-second response times that approach human speed and interaction quality. Visitors to the site can try it first-hand for free through an instant call. The launch fits Bland.AI’s broader push to make powerful AI tools accessible to a wider audience through no-code options such as its Zapier integration.

#BlandAI #TurboAI #ConversationalAI #NoCode #TechInnovation

MM1: Methods, Analysis & Insights from Multimodal LLM Pre-training: This research paper discusses how to build high-performance Multimodal Large Language Models (MLLMs). It examines the critical choices in architecture and data, showing that a careful mix of image-caption, interleaved image-text, and text-only data is key to state-of-the-art few-shot results across several benchmarks. The study also finds that the image encoder has a substantial impact on performance, while the design of the vision-language connector matters comparatively little. The work, by Brandon McKinzie and 31 co-authors, introduces MM1, a family of models of up to 30B parameters that lead on pre-training metrics and achieve competitive performance on multimodal benchmarks.

#MultimodalLLM #FewShotLearning #MachineLearning #ComputerVision #AIResearch

Hello OLMo: A truly open LLM: The Allen Institute for AI (AI2) has released OLMo, a state-of-the-art open Large Language Model (LLM), together with its pre-training data and training code. This gives the AI research community what it needs to study and advance language model science collectively. Built on AI2’s Dolma dataset, OLMo aims to make research more precise, to reduce the carbon footprint of AI development by cutting redundant training work, and to make lasting contributions to open AI development, underscoring the importance of transparency and collaboration.

#AI2 #OpenSource #LanguageModel #ArtificialIntelligence #OpenScience

More Agents Is All You Need: Researchers have found that the performance of large language models (LLMs) can be improved with a straightforward sampling-and-voting method: the same query is sent to multiple agents and the most common answer wins, with performance scaling as the number of agents grows. The technique is simpler than many existing, more complex methods and can be combined with them, and the size of the improvement correlates with task difficulty. Experiments across a range of benchmarks support these findings, and the code is publicly available.

#AI #LargeLanguageModels #MachineLearning #ResearchInnovation #Technology
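A minimal sketch of the sampling-and-voting idea, assuming answers can be compared by exact string match (the paper also describes similarity-based voting for open-ended outputs, which this does not implement). `generate` stands in for any function that samples one answer from an LLM; the `make_stub` helper is hypothetical and simply replays fixed responses so the example is deterministic.

```python
from collections import Counter
from typing import Callable


def sample_and_vote(generate: Callable[[str], str], prompt: str, n_agents: int) -> str:
    """Sample n_agents answers to the same prompt and return the most frequent one."""
    answers = [generate(prompt) for _ in range(n_agents)]
    # Exact-match majority vote; Counter orders equal counts by first appearance,
    # so ties go to the answer seen first.
    winner, _count = Counter(answers).most_common(1)[0]
    return winner


def make_stub(responses: list[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for an LLM: returns the given responses in order."""
    it = iter(responses)
    return lambda prompt: next(it)


if __name__ == "__main__":
    stub = make_stub(["12", "15", "12", "11", "12"])
    print(sample_and_vote(stub, "What is 3 * 4?", n_agents=5))  # prints 12
```

With a real model, `generate` would sample at a non-zero temperature so that the agents’ answers can differ; otherwise every vote would be identical.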

How to unleash the power of AI, with Ethan Mollick: In this YouTube video hosted by Azeem Azhar, Ethan Mollick discusses the power of AI and its technical and societal implications. The video has some audio issues, but an audio-only version with better sound quality is available.

#AI #EthanMollick #Technology #YouTube #AzeemAzhar

Embeddings are a good starting point for the AI curious app developer: This article approaches vector embeddings from a practical standpoint, encouraging app developers to explore the technology to improve search. Drawing on the author’s experience of adding vector embeddings to projects, it highlights how simple they are to adopt and how much they can improve search functionality. It discusses choosing tools such as pgvector, which adds vector search to an existing Postgres database, and offers insights into creating embeddings and implementing search. The author concludes that embeddings are a solid starting point for developers keen to add AI features to their applications.

#VectorEmbeddings #AI #AppDevelopment #SearchFunctionality #TechInsights

Looking for AI Use Cases: Benedict Evans, a technology analyst, examines the current frontier of AI applications, focusing on whether AI use cases are specific or universal. He traces the evolution of technology tools from PCs to today’s chatbots and large language models (LLMs), examining how some technologies found their killer applications while others are still searching for a fit. He highlights generative AI’s potential to turn manual tasks into software processes without anyone having to write software for each task, alongside the challenges of building AI tools that can genuinely address a broad range of use cases.

#AI #TechnologyEvolution #GenerativeAI #SoftwareAutomation #BenedictEvans

Cheat Sheet: 5 prompt frameworks to level up your prompts: This cheat sheet provides five frameworks (RTF, RISEN, RODES, Chain of Thought, and Chain of Density) that make prompts given to ChatGPT more effective, each suited to specific tasks such as decision making, blog writing, or marketing content creation.

#ChatGPT #PromptingGuide #AI #ContentCreation #DigitalMarketing

Here’s Proof You Can Train an AI Model Without Slurping Copyrighted Content: This article challenges OpenAI’s claim that creating useful AI models without copyrighted material is impractical. Recent developments, including a large AI training dataset of public-domain text and a large language model (KL3M) built from ethically sourced data, show that powerful AI systems can be created without breaching copyright law. These advances not only offer a cleaner route for AI development but also open the door to more responsible use of data in training AI models. French researchers and the nonprofit Fairly Trained are at the forefront of this shift, aiming to set a new standard for the AI industry.

#AI #EthicalAI #OpenAI #Copyright #TechInnovation

Screen Recording to Code: “Screen Recording to Code” is an experimental feature that uses AI to turn screen recordings of websites or apps into functional prototypes. It automates much of the workflow but can be costly to run, so users are urged to set usage limits to avoid excessive charges. Examples include recreating Google’s in-app functionality, multi-step forms, and ChatGPT interactions, demonstrating its potential to streamline prototype development.

#AI #WebDevelopment #ScreenRecording #Prototyping #TechInnovation

Emergent Mind: This platform aggregates and presents trending AI research papers. Users can browse by category, choose a timeframe to see trending or top papers, and gauge interest through social signals such as likes and Reddit points. It also offers email subscriptions, sign-up for updates, and a Twitter account that posts summaries of trending AI papers.

#AI #ResearchPapers #EmergentMind #Technology #AcademicResearch

Adobe Is Buying Videos for $3 Per Minute to Build AI Model: Adobe Inc. is gathering videos to build its AI text-to-video generator, aiming to keep pace with OpenAI’s advances in similar technology. It is offering its community of creators $120 to submit videos of people performing everyday activities for use in AI training. The move highlights the industry’s growing focus on more dynamic and realistic AI-generated content.

#Adobe #ArtificialIntelligence #AI #TechNews #OpenAI

Accelerating AI Image Generation with MIT’s Novel Framework: Researchers from MIT CSAIL have introduced an AI image-generation method that collapses the usual multi-step diffusion process into a single step, making generation around 30 times faster without compromising image quality. The framework uses a teacher-student approach, in which a one-step ‘student’ model learns to mimic a multi-step ‘teacher’ diffusion model, improving both efficiency and output fidelity and bridging generative adversarial networks (GANs) and diffusion models. It is a significant advance in generative modelling, with potential applications in fields as diverse as design, drug discovery, and 3D modelling.

#MIT #CSAIL #AI #ImageGeneration #Technology

Financial Market Applications of LLMs: This article explores the intersection of Large Language Models (LLMs) and financial markets, focusing on their potential to transform quantitative trading. It considers whether LLMs, known for their prowess in language tasks, can be applied to price and trade prediction. Topics include how the volume of data used to train GPT-3 compares with the volume of available stock market data, multi-modal AI applications in finance, and the concept of ‘residualisation’ as it appears in both AI and finance. The piece also covers challenges such as the unpredictability of market data and the potential of synthetic data to improve trading strategies.

#LLMs #FinancialMarkets #QuantitativeTrading #AI #MultiModalAI
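For readers unfamiliar with the finance sense of ‘residualisation’, the sketch below removes the market’s contribution from a stock’s returns using a single-factor linear regression, leaving the stock-specific residual. This is an illustrative assumption about the simplest form of the idea, not the article’s method, and the return series are made up.

```python
def residualise(stock_returns: list[float],
                market_returns: list[float]) -> tuple[float, list[float]]:
    """Regress stock returns on market returns (with intercept); return (beta, residuals)."""
    n = len(stock_returns)
    mean_s = sum(stock_returns) / n
    mean_m = sum(market_returns) / n
    # Ordinary least squares slope: cov(stock, market) / var(market)
    cov = sum((s - mean_s) * (m - mean_m) for s, m in zip(stock_returns, market_returns)) / n
    var = sum((m - mean_m) ** 2 for m in market_returns) / n
    beta = cov / var
    alpha = mean_s - beta * mean_m
    # Residual: the part of each return that the market factor does not explain
    residuals = [s - (alpha + beta * m) for s, m in zip(stock_returns, market_returns)]
    return beta, residuals


if __name__ == "__main__":
    market = [0.010, -0.020, 0.015, 0.000, -0.005]  # hypothetical daily market returns
    stock = [0.020, -0.010, 0.000, 0.005, 0.003]    # hypothetical daily stock returns
    beta, residuals = residualise(stock, market)
    print(f"beta = {beta:.3f}")
    print("residuals:", [round(r, 4) for r in residuals])
```

Working with the residuals strips out the move shared with the whole market, so whatever signal remains is specific to the stock.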

Generative AI is still a solution in search of a problem: Generative AI tools like ChatGPT and Google Gemini are generating excitement across the tech industry but face scepticism over their inconsistent accuracy and limited practicality, with critics questioning their usefulness beyond niche applications.

#GenerativeAI #ChatGPT #GoogleGemini #AITrends #TechCritique

Regards, M@

[ED: If you’d like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com.

Also cross-published on Medium and Slideshare: