QFM028: Irresponsible AI Reading List July 2024

Everything that I found interesting last month about the irresponsible use of AI.

Tags: qfm, irresponsible, ai, reading, list, july, 2024

Photo by Florida-Guidebook.com on Unsplash

The July edition of the Irresponsible AI Reading List starts with a critique of AI’s potentially overblown promises in Edward Zitron’s ‘Put Up Or Shut Up’. Zitron condemns the tech industry’s habit of promoting exaggerated claims about AI capabilities, noting that recent announcements, such as those from Lattice and OpenAI, often lack practical substance and evidence. His scepticism is echoed in ‘Everyone Is Judging AI by These Tests’, which argues that outdated benchmark tests fail to accurately assess the nuanced capabilities of modern AI.

The misinformation theme extends to AI summarization. ‘When ChatGPT summarises, it actually does nothing of the kind’ examines the limits of ChatGPT’s summaries, showing that while it can shorten texts, it often misrepresents or omits key information because it lacks genuine understanding.

Bias remains a critical concern, especially in high-stakes fields like medical diagnostics. A study of AI models that analyze medical images highlights significant biases against certain demographic groups, pointing to the limitations of current debiasing techniques and the broader issue of fairness in AI. Similarly, ‘Surprising gender biases in GPT’ shows how GPT language models can perpetuate traditional gender stereotypes, underscoring the ongoing need for improved bias mitigation strategies in AI systems.

The legal and ethical dimensions of AI also feature this month. The dismissal of most claims in the lawsuit against GitHub Copilot, as detailed in ‘Coders’ Copilot code-copying copyright claims crumble’, reveals the complexities of intellectual property issues in AI training practices. Meanwhile, the historical perspective in ‘The Troubled Development of Mass Exposure’ offers insights into the long-standing struggle between technological advancement and privacy rights, drawing parallels to current AI-related concerns about data use and consent.

Lastly, ‘ChatBug: Tricking AI Models into Harmful Responses’ presents a critical vulnerability in AI safety, highlighting how specific attacks can exploit weaknesses in AI’s instruction tuning to produce harmful outputs.

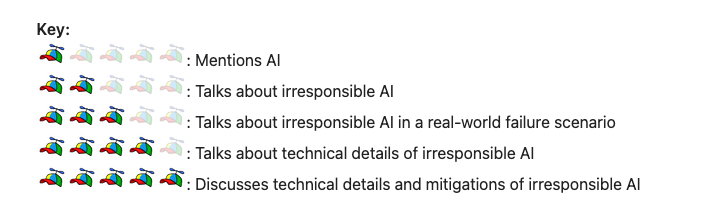

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

The Troubled Development of Mass Exposure: The spread of amateur photography in the 1880s democratized the art, but it also spurred a movement concerned with the right to privacy. The Kodak camera, introduced in 1888, made photography widespread and accessible, raising issues such as image rights and unauthorized use. The article explores historical cases, including one where a woman’s portrait was used without consent in a medicine ad, and the societal response to these privacy invasions. Legislation slowly developed to address these issues, highlighting the ongoing struggle between technology and privacy.

#Photography #Kodak #Privacy #History #Law

GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models: This paper investigates the potential impacts of large language models (LLMs) like GPTs on the U.S. labor market. The authors estimate that around 80% of the U.S. workforce could have at least 10% of their work tasks affected by LLMs, while around 19% may see at least 50% of their tasks impacted. The study also suggests that higher-income jobs may face greater exposure to LLM capabilities, with significant implications for economic, social, and policy aspects.

#LLMs #GPTs #LaborMarket #AI #Economics

Coders’ Copilot code-copying copyright claims crumble against GitHub, Microsoft: Developers’ claims that GitHub Copilot was unlawfully copying their code have largely been dismissed, with only two allegations remaining in their lawsuit against GitHub, Microsoft, and OpenAI. The lawsuit, filed in November 2022, contended that Copilot was trained on open source software and violated the original creators’ intellectual property rights. Most of the claims have been thrown out, including an important one under the DMCA; the two that remain concern an open source license violation and breach of contract.

#GitHub #Copilot #Microsoft #OpenAI #Lawsuit

Surprising gender biases in GPT: The article examines the existence of gender biases in GPT language models, revealing that these models often reinforce traditional gender stereotypes and exhibit unequal treatment of different genders. The findings underscore the need for improved measures to mitigate bias in AI systems to ensure fair and equitable outcomes.

#GenderBias #AI #GPT #BiasInAI #Technology

Put Up Or Shut Up: In his article ‘Put Up Or Shut Up’, Edward Zitron criticizes the tech industry’s current trend of hyping up AI with empty promises and meaningless marketing. He particularly focuses on Lattice’s recent announcement about employing AI ‘digital workers’, labeling the idea as nonsensical and disconnected from practical use, and criticizes OpenAI’s questionable claims about progressing towards Artificial General Intelligence (AGI). Zitron calls for more scrutiny and skepticism when dealing with flashy tech advancements that lack substantial evidence or practical application.

#AI #TechIndustry #OpenAI #DigitalWorkers #Innovation

ChatBug: Tricking AI Models into Harmful Responses: Researchers from the University of Washington and the Allen Institute for AI have discovered a vulnerability in the safety alignment of large language models (LLMs) like GPT, Llama, and Claude. Known as ‘ChatBug,’ this vulnerability exploits the chat templates used for instruction tuning. Attacks such as format mismatch and message overflow can trick LLMs into producing harmful responses. The research highlights the difficulty in balancing safety and performance in AI systems and calls for improved safety measures in instruction tuning.

#AI #LLM #Cybersecurity #MachineLearning #ArtificialIntelligence

Study reveals why AI models that analyze medical images can be biased: AI models are crucial in analyzing medical images like X-rays for diagnostics. However, these models often show biases against certain demographic groups, such as women and people of color. MIT researchers have demonstrated that the models that are most accurate at predicting demographics also show the largest fairness gaps, which suggests that these models rely on demographic shortcuts that lead to inaccurate diagnoses. While debiasing techniques can improve fairness on the same data sets they were trained on, these improvements don’t always transfer when applied to new data sets from different hospitals.

#AI #MedicalImaging #Bias #HealthcareInnovation #MIT
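
For readers unfamiliar with the term, a "fairness gap" can be read as the difference in a model's performance between demographic groups. The snippet below is a minimal sketch of that idea, assuming plain accuracy as the performance measure; it is not necessarily the metric used in the MIT study, and the function names and toy data are hypothetical, made up purely for illustration.

```python
from collections import defaultdict


def accuracy_by_group(labels, predictions, groups):
    """Return the fraction of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for label, prediction, group in zip(labels, predictions, groups):
        total[group] += 1
        correct[group] += int(label == prediction)
    return {group: correct[group] / total[group] for group in total}


def fairness_gap(labels, predictions, groups):
    """Difference between the best and worst per-group accuracy."""
    per_group = accuracy_by_group(labels, predictions, groups)
    return max(per_group.values()) - min(per_group.values())


# Hypothetical toy data: eight cases split across two groups, "a" and "b".
labels      = [1, 0, 1, 1, 0, 1, 0, 0]
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups      = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(accuracy_by_group(labels, predictions, groups))
print(fairness_gap(labels, predictions, groups))
```

A gap of zero would mean the model performs equally well on every group. The study's point about transfer corresponds to this number looking small on the hospital data a model was debiased on and growing again on data from a different hospital.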

When ChatGPT summarises, it actually does nothing of the kind: The article explores the limitations of ChatGPT in summarising texts. Through various examples, the author explains how ChatGPT often fails to accurately capture the core ideas of long documents, instead producing shortened versions that miss key points and sometimes fabricate information. The article concludes that ChatGPT doesn’t truly understand the content it is summarising, leading to unreliable summaries. The author suggests that while ChatGPT can shorten texts, it lacks the deeper understanding needed for genuine summarisation.

#AI #ChatGPT #LLMs #Technology #GenerativeAI

Everyone Is Judging AI by These Tests. But Experts Say They’re Close to Meaningless: Experts argue that the benchmark tests widely used to evaluate AI performance are outdated and lack validity. These tests, often sourced from amateur websites and designed for simpler models, fail to measure the nuances of newer AI systems’ capabilities. Additionally, marketing of AI tools using these benchmarks can mislead users about their true functionality, raising concerns especially in high-stakes domains like healthcare and law.

#AI #MachineLearning #TechNews #Benchmarking #AITesting

Regards,

Originally published on quantumfaxmachine.com. Also available on Medium.