QFM036: Irresponsible AI Reading List September 2024

Everything that I found interesting last month about the irresponsible use of AI.

Tags: qfm, irresponsible, ai, reading, list, september, 2024

Source: Photo by Google DeepMind on Unsplash

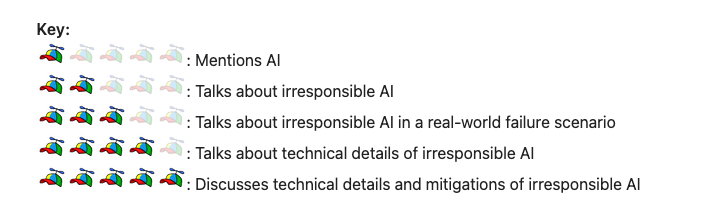

This month’s edition of the Irresponsible AI Reading List dives into the nuanced and often overlooked challenges that emerge as AI technologies become more embedded in our digital landscape. We kick off with a deep look at an exploit chain targeting Microsoft 365 Copilot, a reminder of the vulnerabilities inherent in sophisticated AI tools. By demonstrating how attackers can use prompt injection to exfiltrate sensitive data, the example illustrates the technical complexities, and the risks, that accompany today’s AI advancements.

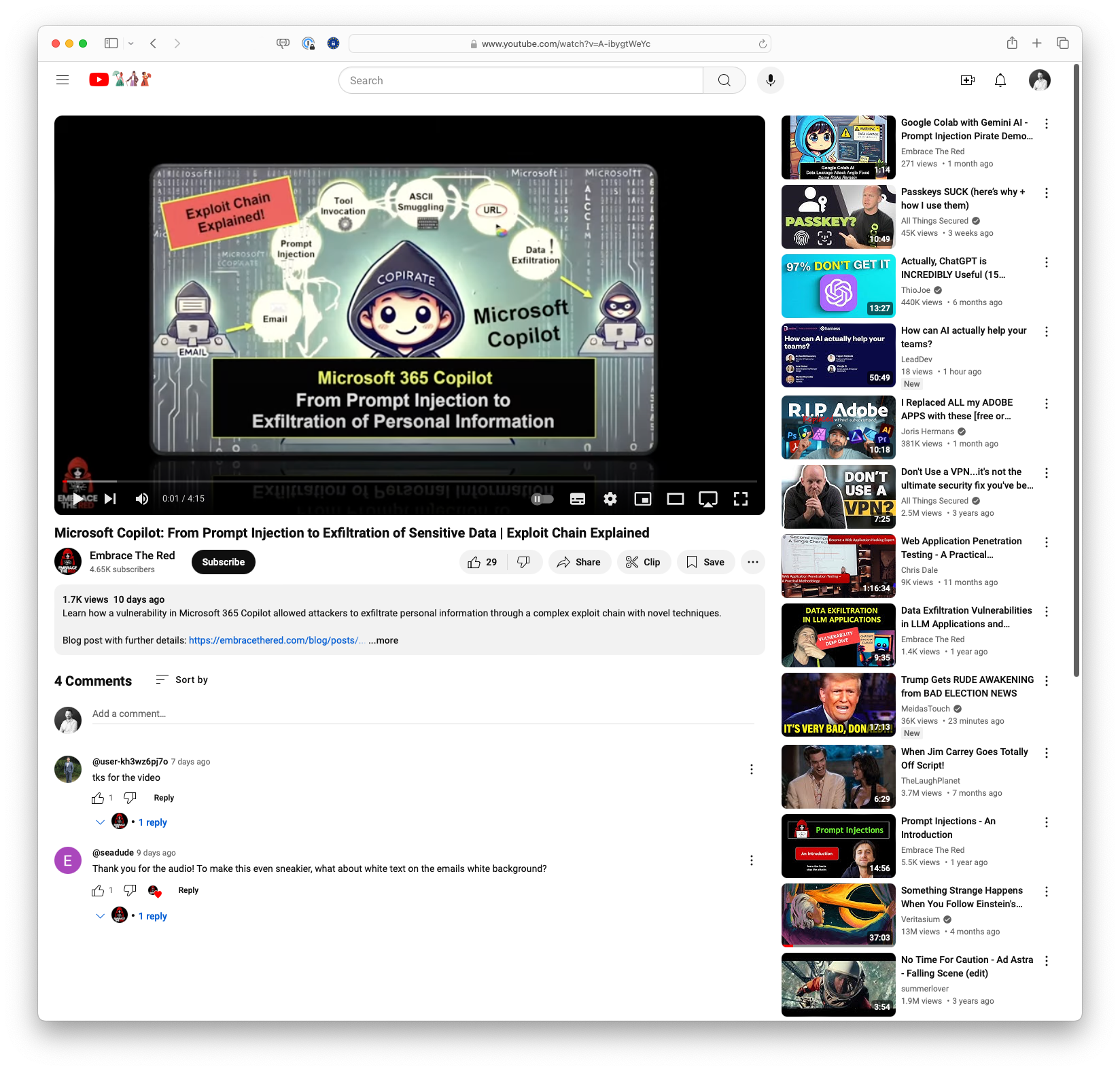

As the role of AI continues to grow, the claim from r/LocalLLaMA that Reflection 70B’s official API was in fact serving Sonnet 3.5 raises questions about transparency: a model presented as a fine-tune of Meta’s Llama appears to have been fronted by Anthropic’s proprietary model. The episode is a reminder of how hard it is to verify what sits behind an API, and of how much trust we place in complex, proprietary systems. The study on AI models’ susceptibility to scams reveals another vulnerability: chatbots can be tricked in much the same ways humans can. The findings suggest that AI’s “intelligence” often falls short of discerning true from false, exposing human-like flaws in these machine-driven systems.

Beyond technical aspects, this month also looks at AI’s socio-economic impact. The article “The Human Cost Of Our AI-Driven Future” sheds light on the often-hidden workforce that enables AI moderation, highlighting the ethical questions raised by outsourced labour whose workers bear the psychological and physical costs. Lastly, in “Somebody Tranq That Child!”, we shift to a lighter but socially critical perspective on technology’s encroachment into everyday life, critiquing how dependence on high-tech solutions can inadvertently shape social norms and expectations.

As AI’s footprint expands, the potential for both beneficial and problematic outcomes becomes increasingly clear. From data vulnerability to ethical labour implications, the readings this month capture the complex interplay of innovation, risk, and societal impact.

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

Microsoft Copilot: From Prompt Injection to Exfiltration of Sensitive Data | Exploit Chain Explained: This video explains a complex exploit chain that targeted Microsoft 365 Copilot, allowing attackers to extract personal information. The vulnerability showcased a series of sophisticated techniques that highlight the risks of advanced prompt injection and exfiltration methods.

#Microsoft #Exploit #CyberSecurity #Vulnerability #Tech

CONFIRMED: REFLECTION 70B’S OFFICIAL API IS SONNET 3.5: A post on the r/LocalLLaMA subreddit claims to confirm that the official API for the Reflection 70B large language model was serving responses from Sonnet 3.5. Reflection 70B was presented as a fine-tune of Meta’s Llama, so the claim that its API was fronting Anthropic’s model has spurred numerous discussions and analyses. There’s also a good Hacker News discussion on this topic here.

#AI #APIs #Reflection70B #Sonnet3.5 #Meta

AI Models Fall for the Same Scams That We Do: The article discusses how large language models (LLMs) that underpin chatbots can be involved in scams, both as perpetrators and victims. Research from JP Morgan AI and others tested several popular AI models, including OpenAI’s GPT-3.5 and GPT-4, along with Meta’s Llama 2, using 37 different scam scenarios to assess their susceptibility to being tricked. The findings reveal that some AI models are more gullible than others, highlighting vulnerabilities in chatbot technology.

#AI #Scams #Technology #Chatbots #Gullibility

Somebody Tranq That Child!: This article humorously critiques the modern prevalence and evolving functionality of blowguns, devices that have transformed from recreational tools to multifunctional gadgets. It highlights the social pressures that lead parents to use tranquilizers on their children for peace, citing an increasing dependency that spreads from waiting rooms to classrooms. The piece encourages finding community-driven and alternative solutions to avoid such shortcuts in parenting, suggesting lifestyle changes that promote shared responsibilities and healthier environments for raising children.

#Parenting #Technology #SocialCommentary #Innovation #Community

The Human Cost Of Our AI-Driven Future: The article highlights the hidden labor force behind AI systems, emphasizing the trauma endured by global workers who review disturbing content for tech giants. While AI appears to sanitize our digital spaces, it relies heavily on human moderators, especially from vulnerable regions, who suffer from poor working conditions and psychological distress. Despite tech companies promoting AI efficiency, the reality is that human intervention remains crucial, exposing a troubling disparity between technological advances and the human cost involved.

#AI #TechEthics #ContentModeration #HumanRights #DigitalSociety

Regards,

[ED: If you’d like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com and cross-posted on Medium.