QFM024: Irresponsible AI Reading List - June 2024

Source: Photo by Thomas Kinto on Unsplash

The June edition of the Irresponsible AI Reading List starts with a critique of AI's potential for exaggerated promises in science and academia, then turns to the societal implications of AI-driven job automation and privacy concerns. A recurring theme is the urgent need for critical, informed engagement with AI technologies.

We then look at the role AI plays in amplifying societal biases, the privacy rights at stake in AI profiling, and the mental health impacts of AI-induced job insecurity. The discussion of Meta's use of facial recognition for age verification highlights the tension between technological advancement and privacy rights, while Citigroup's report on AI's potential to automate over half of banking jobs illustrates the profound economic shifts underway in the job market.

ChatBug: Tricking AI Models into Harmful Responses looks at how vulnerabilities in AI models can be exploited to produce harmful responses, pointing to the critical need for more robust cybersecurity measures when working with AI.

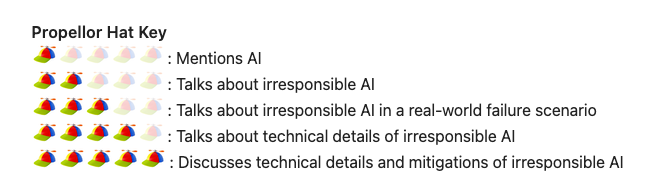

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

Links

In this article, the author addresses a crucial question about the major challenges facing the tech industry. They highlight the anxiety and mental health issues stemming from layoffs and fears of AI replacing programmers. The piece advises leaders on how to mitigate these anxieties through open communication, positivity, and effective use of new technologies.

Regards,

M@

[ED: If you'd like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com and cross-posted on Medium.

hello@matthewsinclair.com | matthewsinclair.com | bsky.app/@matthewsinclair.com | masto.ai/@matthewsinclair | medium.com/@matthewsinclair | xitter/@matthewsinclair
