QFM044: Irresponsible AI Reading List - November 2024

Source: Photo by Kind and Curious on Unsplash

The November edition of the Irresponsible AI Reading List opens with Despite Its Impressive Output, Generative AI Doesn’t Have a Coherent Understanding of the World, in which MIT researchers highlight a core limitation of AI: a lack of true world understanding. While large language models (LLMs) can produce human-like responses, they falter when deeper comprehension or adaptability is required. This disconnect underscores AI’s dependency on static data and shows how far it falls short of the expectations placed on a tool that can truly “think.”

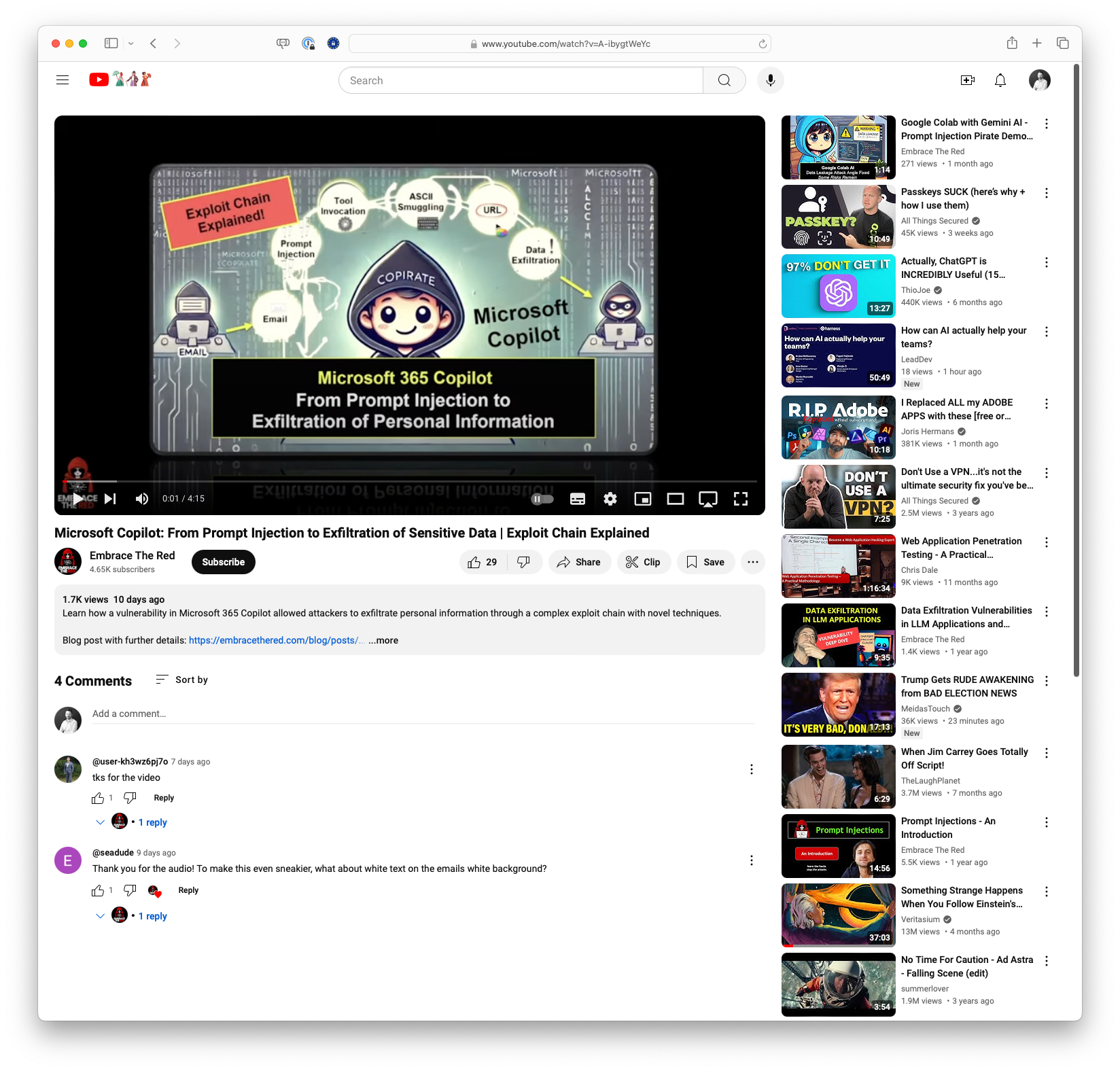

The theme of inflated expectations is echoed in YC is Wrong about LLMs for Chip Design, which critiques the belief that LLMs can revolutionise complex engineering fields like chip design. While AI tools may assist in reducing costs and aiding verification tasks, they fall short of replicating the nuanced expertise of skilled engineers. Similarly, AI Makes Tech Debt More Expensive reframes the assumption that AI alleviates development challenges, arguing that AI actually amplifies the cost of technical debt. Codebases burdened with legacy issues struggle to harness AI’s potential, highlighting the need for clean architecture to unlock its benefits.

As AI increasingly enters the workplace, the question of its societal impact grows sharper. How AI Could Break the Career Ladder addresses a troubling trend: the automation of entry-level jobs. These roles, often critical for skill-building and career progression, risk being replaced by AI systems, potentially creating industries with hollowed-out career pipelines. This concern is mirrored in The Tech Utopia Fantasy is Over, which critiques the long-held belief that technological advancement would deliver universally positive outcomes. Instead, the article examines how tech solutions often exacerbate societal inequalities and environmental concerns.

In creative fields, Anti-scale: a response to AI in journalism suggests that generative AI may undermine trust and authenticity in journalism. Because AI can produce plausible but potentially misleading content, the piece makes the case for human-driven, community-focused approaches that foster genuine engagement. This emphasis on human connection provides a counterpoint to the efficiency-focused narratives often driving AI adoption.

Finally, Manna – Two Views of Humanity’s Future – Chapter 1 offers a speculative lens on the societal implications of AI and automation, imagining a world where software micromanages labour to maximise efficiency. While fictional, the narrative forces readers to consider the trade-offs of such systems in terms of agency, equity, and humanity.

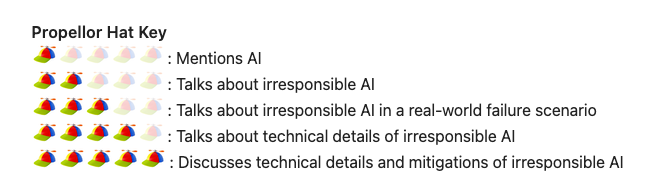

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

Links

Regards,

M@

[ED: If you'd like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com and cross-posted on Medium.

hello@matthewsinclair.com | matthewsinclair.com | bsky.app/@matthewsinclair.com | masto.ai/@matthewsinclair | medium.com/@matthewsinclair | xitter/@matthewsinclair
