QFM056: Irresponsible AI Reading List - February 2025

Source: Photo by Brett Jordan on Unsplash

We start this month's Irresponsible AI Reading List with Baldur Bjarnason's The LLMentalist Effect, which offers a compelling comparison between LLMs and the cold reading techniques used by psychics. The article examines why we perceive intelligence in these systems when they are merely applying statistical patterns, creating a technological illusion that convinces users of an understanding that is not there. This psychological mechanism helps explain the widespread belief in AI capabilities that may not actually exist.

This theme of perception versus reality continues in Edward Zitron's The Generative AI Con, which criticises the disconnect between AI industry hype and practical utility. Zitron questions whether the millions of reported users translate to meaningful integration in our daily lives or sustainable business models, suggesting the AI industry may be building on unstable financial foundations.

The discourse around AI's future capabilities receives critical examination in Jack Morris's Please Stop Talking About AGI, which argues that our fixation on hypothetical future superintelligence diverts attention from pressing present-day AI challenges. Morris suggests this misdirection of focus hampers our ability to address the immediate ethical considerations of existing AI technologies.

Miles Brundage adds a practical perspective in Some Very Important Things (That I Won't Be Working On This Year), outlining critical AI domains requiring attention: AI safety awareness, technical infrastructure for AI agents, economic impacts, regulatory frameworks, and AI literacy. Each represents a vital area where engagement is needed to navigate both challenges and opportunities in our evolving AI landscape.

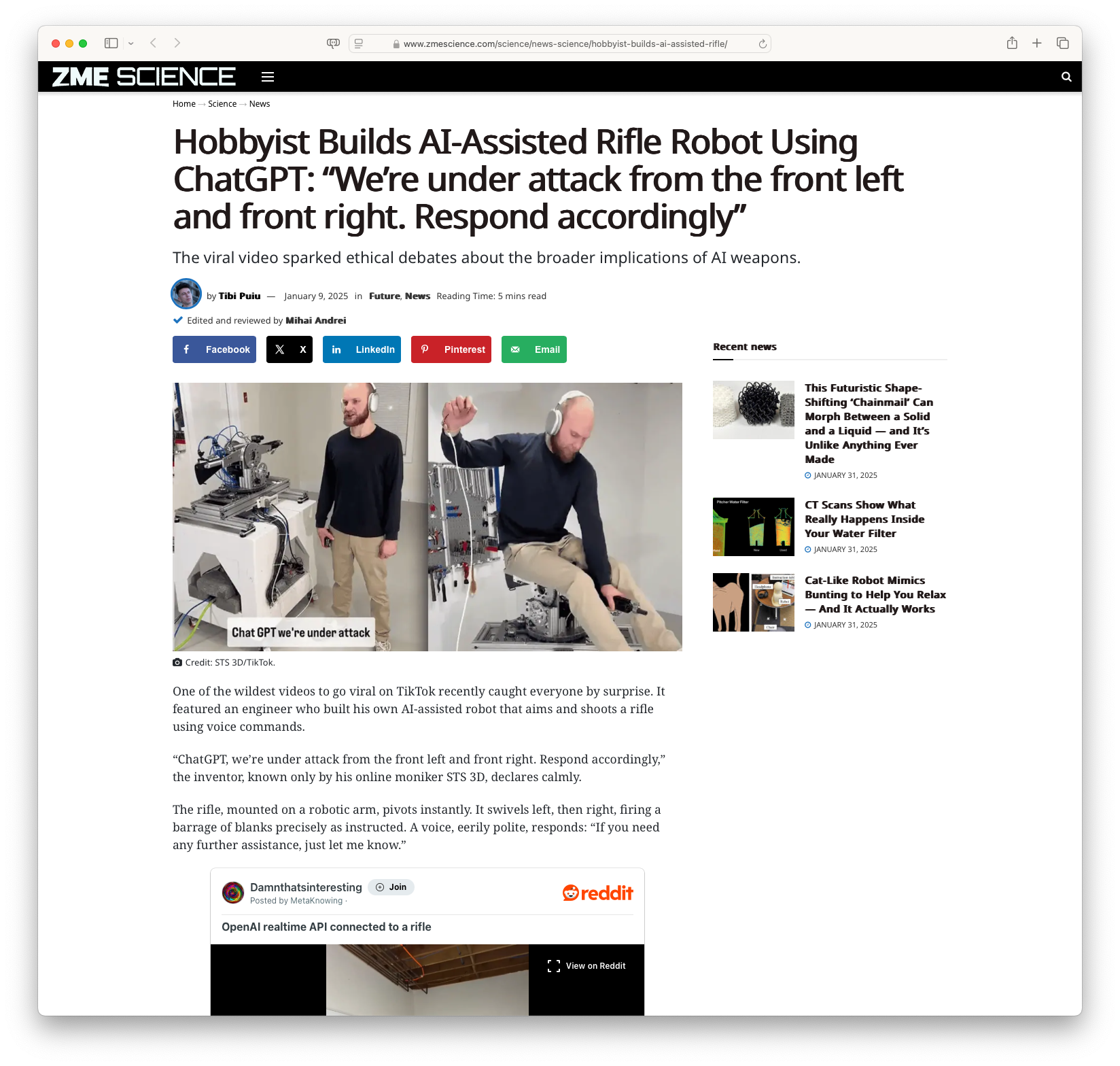

The most concerning applications of AI emerge in Juan Sebastián Pinto's The Guernica of AI, which draws alarming parallels between historical warfare and contemporary AI-powered military technologies. Writing from personal experience at Palantir, Pinto examines how AI surveillance systems are reshaping modern conflict and civilian life through pervasive data control, raising profound ethical questions about the human consequences of militarised AI.

The dual nature of AI technologies is further explored in Modern-Day Oracles or Bullshit Machines?, which characterises LLMs as both transformative accessibility tools and potential misinformation vectors. The article compares these systems to revolutionary inventions like the printing press while warning of their unprecedented capacity to propagate falsehoods, emphasising the need for critical digital literacy in navigating their outputs.

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

Links

Regards,

M@


Originally published on quantumfaxmachine.com and cross-posted on Medium.

hello@matthewsinclair.com | matthewsinclair.com | bsky.app/@matthewsinclair.com | masto.ai/@matthewsinclair | medium.com/@matthewsinclair | xitter/@matthewsinclair
