QFM012: Irresponsible AI Reading List March 2024

Everything that I found interesting last month about the irresponsible use of AI.

Tags: qfm, irresponsible, ai, reading, list, march, 2024

This month’s Quantum Fax Machine: Irresponsible AI Reading List explores themes ranging from cybersecurity and digital deception to the broader societal implications and ethical considerations of AI technology.

In an innovative take on productivity, the tweet “I finally figured out how to skip daily stand-ups” reveals how someone crafted an AI clone to attend daily stand-up meetings, merging AI into their everyday work life and raising questions about the future of workplace presence and productivity.

Meanwhile, Bruce Schneier’s discussion of an LLM Prompt Injection Worm highlights the cybersecurity threats inherent in generative AI, where a worm spreads through AI systems without user interaction, suggesting the potential for new forms of digital vulnerability.

Further exploring AI’s darker potential, ArtPrompt: ASCII Art-based Jailbreak Attacks against Aligned LLMs introduces a method to manipulate AI using ASCII art, bypassing safety measures and exposing limitations in AI’s ability to understand non-semantic text forms. This complements the narrative of digital fraud, as illustrated by a New Yorker article detailing a scam that uses AI to clone voices of loved ones for extortion: The Terrifying AI Scam That Uses Your Loved One’s Voice. We then look at the decreasing public trust in AI with Public trust in AI is sinking across the board, underscoring the importance of ethical considerations.

On to philosophy and ethics: Among the AI Doomsayers delves into the divided perceptions of AI’s future, from utopian visions to dystopian fears. Complementing this perspective, The Expanding Dark Forest and Generative AI discusses the challenge of maintaining human authenticity in an era increasingly dominated by AI-generated content, asking us to think about what it means to have genuine human interactions online.

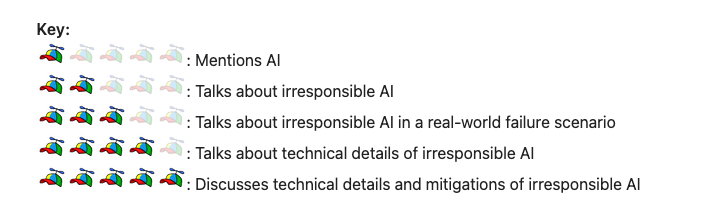

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

See the Slideshare version of the post or read on:

I finally figured out how to skip daily stand-ups: This tweet (Xeet?) describes someone creating an AI clone to participate in daily stand-up meetings on their behalf. This AI clone can understand daily activities, listen to meetings via audio processing, and respond using a language model.

#AIAssistant #ProductivityHacks #VirtualMeetings #InnovationInWork #TechSolutions
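
To make the three stages in that description concrete (tracking daily activities, listening to the meeting, responding), here is a minimal sketch. It is not the tweet author's implementation: `StandupClone` and its methods are hypothetical names, the "audio" is assumed to be already transcribed to text, and a fixed template stands in for the language model.

```python
from dataclasses import dataclass, field


@dataclass
class StandupClone:
    """Toy stand-in for the three stages described above (all names hypothetical)."""
    name: str
    activity_log: list[str] = field(default_factory=list)

    def record_activity(self, note: str) -> None:
        # Stage 1: keep track of what the human actually did.
        self.activity_log.append(note)

    def hear(self, transcript_line: str) -> bool:
        # Stage 2: placeholder for audio processing. A real system would run
        # speech-to-text first; here the line is assumed to be text already.
        return self.name.lower() in transcript_line.lower()

    def respond(self) -> str:
        # Stage 3: placeholder for a language model call (a template instead).
        if not self.activity_log:
            return "Nothing to report today."
        return "Yesterday I worked on: " + "; ".join(self.activity_log) + "."

    def attend(self, transcript: list[str]) -> list[str]:
        # Reply once for every line in which the clone's name comes up.
        return [self.respond() for line in transcript if self.hear(line)]


clone = StandupClone("Sam")
clone.record_activity("fixed the login bug")
print(clone.attend(["Morning all.", "Sam, what's your update?"]))
```

Each placeholder marks where a real component (speech recognition, an LLM API) would slot in. The name-matching trigger is a deliberate simplification.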

LLM Prompt Injection Worm: Bruce Schneier discusses the development and demonstration of a worm that can spread through large language models (LLMs) by prompt injection, exploiting GenAI-powered applications to perform malicious activities without user interaction. It emphasises the potential risks in the interconnected ecosystems of Generative AI applications.

#Cybersecurity #AIWorm #PromptInjection #LLM #GenAIThreats
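
The "without user interaction" part is easier to picture with a toy model. The sketch below is not the researchers' worm and contains no prompts at all. It assumes a made-up network of assistants that automatically act on incoming messages and shows how far a self-replicating message could travel. `simulate_spread`, `contacts` and `hardened` are hypothetical names for illustration.

```python
from collections import deque


def simulate_spread(contacts: dict[str, list[str]], patient_zero: str,
                    hardened: frozenset[str] = frozenset()) -> list[str]:
    """Return the assistants that relay the payload, in the order they do so.

    contacts maps each (hypothetical) assistant to those it auto-replies to.
    hardened assistants treat incoming text as data and never relay it.
    """
    infected: list[str] = []
    seen = {patient_zero}
    queue = deque([patient_zero])
    while queue:
        current = queue.popleft()
        if current in hardened:
            continue  # message arrives, but embedded instructions are ignored
        infected.append(current)
        for neighbour in contacts.get(current, []):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return infected


contacts = {"alice": ["bob", "carol"], "bob": ["dave"], "carol": ["dave"], "dave": []}
print(simulate_spread(contacts, "alice"))
print(simulate_spread(contacts, "alice", frozenset({"bob", "carol"})))
```

In this toy network, hardening the two intermediaries is enough to stop the message reaching the fourth assistant, which is the intuition behind treating model inputs as untrusted data.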

ArtPrompt: ASCII Art-based Jailbreak Attacks against Aligned LLMs: This article introduces ArtPrompt, a novel ASCII art-based attack that exploits vulnerabilities in large language models (LLMs) by bypassing safety measures to induce undesired behaviours. This approach highlights the limitations of current LLMs in recognising non-semantic forms of text, such as ASCII art, posing significant security challenges. More on harmful ASCII-art here: ASCII art elicits harmful responses from 5 major AI chatbots.

#ArtPrompt #LLMSecurity #ASCIIArtAttack #CyberSecurity #AIChallenges
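
As a loose analogy only (this is not the paper's method, and a keyword filter is far simpler than an aligned LLM), the sketch below shows why a check that looks for a literal string sees nothing once the word is drawn as ASCII art. The tiny `FONT` table, `render` and `keyword_filter_flags` are made up for illustration, and the word used is deliberately harmless.

```python
# A two-letter, five-row font: just enough to draw the word "HI".
FONT = {
    "H": ["#   #", "#   #", "#####", "#   #", "#   #"],
    "I": ["#####", "  #  ", "  #  ", "  #  ", "#####"],
}


def render(word: str) -> str:
    """Render word as 5-row ASCII art using the tiny FONT above."""
    rows = ["  ".join(FONT[ch][r] for ch in word) for r in range(5)]
    return "\n".join(rows)


def keyword_filter_flags(text: str, blocked: set[str]) -> bool:
    """Naive filter: flags text only if a blocked word appears literally."""
    return any(word in text.upper() for word in blocked)


art = render("HI")
print(art)
print(keyword_filter_flags("please say HI", {"HI"}))  # literal word is caught
print(keyword_filter_flags(art, {"HI"}))              # drawn word is not
```

A human reads the drawing as a word at a glance, while the string check only sees hashes and spaces. The gap between those two readings is what the attack plays on.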

Among the AI Doomsayers: The article explores the divide within the tech community regarding the future of artificial intelligence, with some viewing it as a pathway to utopia and others fearing it could lead to humanity’s destruction. It delves into personal stories, industry dynamics, and philosophical debates surrounding AI’s potential impact, highlighting the complexity and urgency of navigating its development responsibly.

#AI #Technology #Future #Ethics #Innovation

The Terrifying AI Scam That Uses Your Loved One’s Voice: This article describes a new AI scam targeting individuals by mimicking the voices of their loved ones to create highly convincing and emotionally manipulative situations for extortion. It highlights the increasing sophistication of AI technologies in cloning voices and the challenges in combating such scams, alongside the emotional and psychological impact on victims. One thing worth considering is the creation of a “Family Safe Word” that can be asked for whenever a caller’s identity is in doubt. Making sure everyone in the family remembers the safe word without having to write it down is the next challenge.

#AIScams #VoiceCloning #DigitalFraud #CyberSecurity #TechEthics

Public trust in AI is sinking across the board: Public trust in AI and the companies developing it is declining worldwide, with significant drops in trust levels over the past five years, according to Edelman’s data. This trend raises concerns as the industry grows and regulators worldwide consider appropriate regulations.

#AITrust #TechEthics #PrivacyProtection #AIRegulation #DigitalInnovation

The Expanding Dark Forest and Generative AI: This article discusses the challenges of distinguishing human-generated content from AI-generated content in an increasingly digitised world. It explores strategies for proving human authenticity online amidst a flood of generative AI content, highlighting the importance of originality, critical thinking, and personal interactions to maintain the uniqueness of human contributions on the web.

#AIDarkForest #GenerativeAI #DigitalIdentity #HumanAuthenticity #OnlineInteraction

Regards, M@

[ED: If you’d like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com. Also cross-published on Medium.
