QFM044: Irresponsible AI Reading List November 2024

Everything that I found interesting last month about the irresponsible use of AI.

Tags: qfm, irresponsible, ai, reading, list, november, 2024

Source: Photo by Kind and Curious on Unsplash

The November edition of the Irresponsible AI Reading List opens with Despite Its Impressive Output, Generative AI Doesn’t Have a Coherent Understanding of the World, in which MIT researchers highlight a core limitation of AI: a lack of true world understanding. While large language models (LLMs) can produce human-like responses, they falter when deeper comprehension or adaptability is required. This disconnect underscores AI’s dependency on static data and shows how far it falls short of expectations for a tool that can truly “think.”

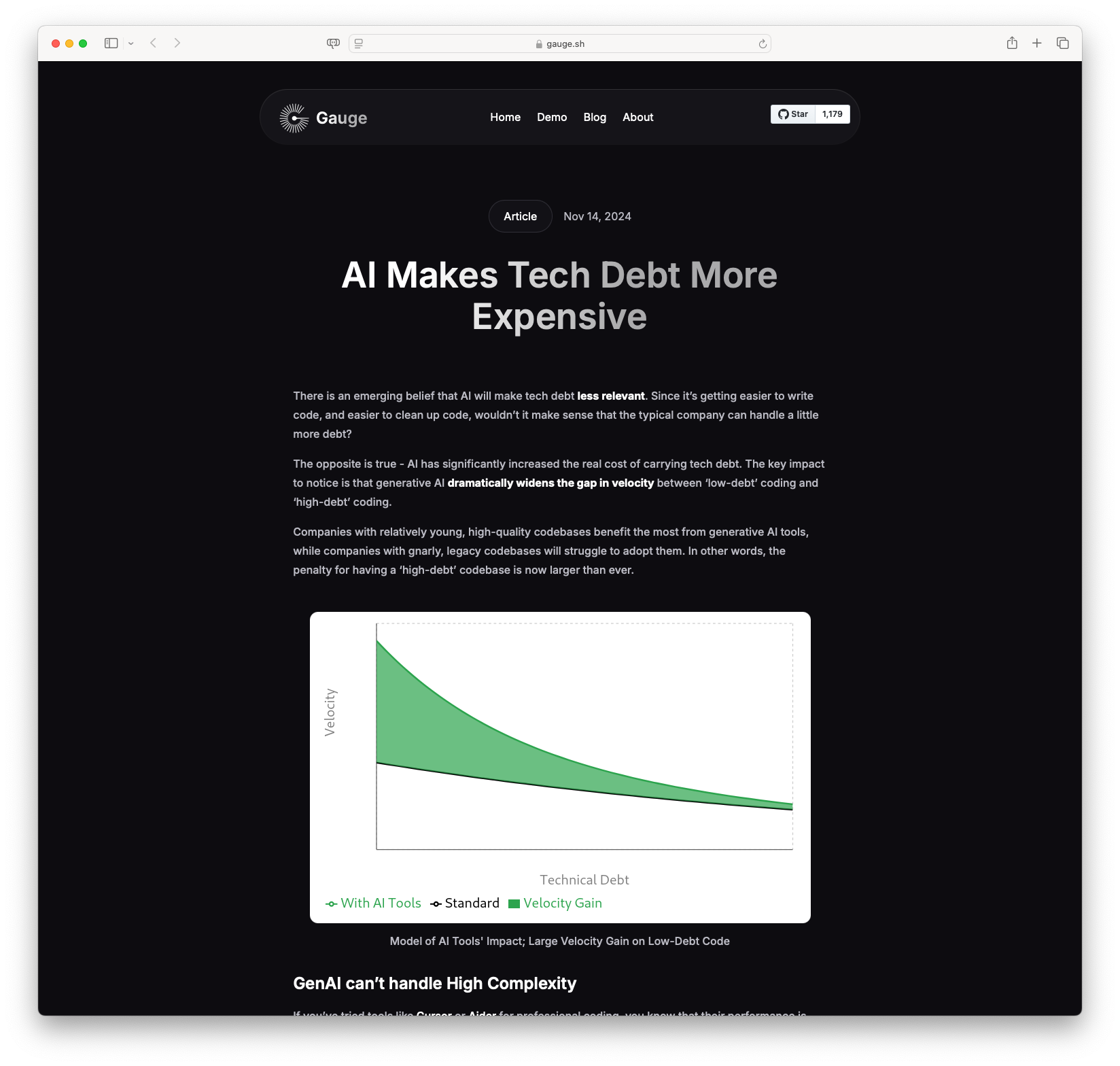

The theme of inflated expectations is echoed in YC is Wrong about LLMs for Chip Design, which critiques the belief that LLMs can revolutionise complex engineering fields like chip design. While AI tools may assist in reducing costs and aiding verification tasks, they fall short of replicating the nuanced expertise of skilled engineers. Similarly, AI Makes Tech Debt More Expensive reframes the assumption that AI alleviates development challenges, arguing that AI actually amplifies the cost of technical debt. Codebases burdened with legacy issues struggle to harness AI’s potential, highlighting the need for clean architecture to unlock its benefits.

As AI increasingly enters the workplace, the question of its societal impact grows sharper. How AI Could Break the Career Ladder addresses a troubling trend: the automation of entry-level jobs. These roles, often critical for skill-building and career progression, risk being replaced by AI systems, potentially creating industries with hollowed-out career pipelines. This concern is mirrored in The Tech Utopia Fantasy is Over, which critiques the long-held belief that technological advancement would deliver universally positive outcomes. Instead, the article examines how tech solutions often exacerbate societal inequalities and environmental concerns.

In creative fields, Anti-scale: a response to AI in journalism suggests that generative AI may undermine trust and authenticity in reporting. Because AI produces plausible but potentially misleading content, the article argues for human-driven, community-focused approaches that foster genuine engagement. This emphasis on human connection provides a counterpoint to the efficiency-focused narratives often driving AI adoption.

Finally, Manna – Two Views of Humanity’s Future – Chapter 1 offers a speculative lens on the societal implications of AI and automation, imagining a world where software micromanages labour to maximise efficiency. While fictional, the narrative forces readers to consider the trade-offs of such systems in terms of agency, equity, and humanity.

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

Despite Its Impressive Output, Generative AI Doesn’t Have a Coherent Understanding of the World: MIT researchers have found that even the best-performing large language models (LLMs) lack a coherent understanding of the world. These models, capable of impressive tasks like writing poetry and providing navigation, fail when conditions change or require a true model of the world to succeed.

#AIResearch #MachineLearning #GenerativeAI #Technology #MIT

AI Makes Tech Debt More Expensive: The article challenges the misconception that AI will alleviate tech debt. It argues that the opposite is true: AI increases the cost of tech debt because it widens the velocity gap between low-debt and high-debt codebases. Companies with newer, cleaner codebases can leverage AI better, while those with legacy code struggle, which increases the penalty for tech debt. The article suggests that refactoring codebases and using modular design are necessary to fully benefit from AI tools. It encourages developers to shift focus from code implementation to architecture to enable AI-driven rapid development.

#AI #TechDebt #CodeQuality #GenerativeAI #SoftwareDevelopment

Anti-scale: a response to AI in journalism: In his article, Tyler Fisher argues against the adoption of generative AI in journalism, citing a lack of trust in current journalism practices and the potential for AI to exacerbate misinformation issues. Generative AI, according to Fisher, provides plausible but often inaccurate content that fails to solve the industry’s pressing issues. Instead, he advocates for an “anti-scale” approach that emphasises human connection, community, and actual experiences over automation and scale, suggesting that AI-generated content cannot foster the trust and community engagement that journalism needs.

#Journalism #AI #TrustInMedia #CommunityEngagement #TechEthics

A Student’s Guide to Writing with ChatGPT: The article outlines how students can use ChatGPT to enhance their writing and critical thinking by automating tasks like citation formatting, generating ideas, providing feedback on structure, and engaging in dialogue to refine arguments, while emphasising transparency and academic integrity in its use. It discourages reliance on AI to replace the learning process and advocates for responsible and thoughtful application.

#ChatGPT #StudentWriting #CriticalThinking #AcademicIntegrity #AIinEducation

Manna – Two Views of Humanity’s Future – Chapter 1: Imagine the start of a robotic revolution not from major tech hubs like NASA or MIT, but from a Burger-G fast-food restaurant in North Carolina. This narrative explores the implementation of Manna, sophisticated software that acts as the manager and streamlines operations by micromanaging every employee in real time. By assigning precise tasks through headsets, Manna almost doubles workers’ performance and customer satisfaction, eventually leading to widespread adoption across various industries.

#Technology #Automation #FutureOfWork #Robotics #FastFood

How AI Could Break the Career Ladder: Generative AI risks automating entry-level jobs in professions like finance, law, and consulting, disrupting traditional on-the-job learning and apprenticeship models, and potentially reducing career opportunities for new graduates, particularly those from underrepresented groups. This shift may lead to industries staffed by senior managers overseeing AI systems, raising questions about skill development and career progression.

#AI #Automation #Jobs #CareerLadder #FutureOfWork

YC is Wrong about LLMs for Chip Design: The article critiques Y Combinator’s proposal that large language models (LLMs) could revolutionise chip design, arguing instead that such AI tools are currently incapable of delivering the high-level, novel innovations that skilled engineers provide. It regards YC’s belief that LLMs can significantly outpace human chip designers as flawed, given how poorly LLMs currently perform at generating advanced chip architecture. The author further suggests that while LLMs might reduce design costs and aid in verification tasks, they will not replace the nuances of human expertise, particularly in competitive, high-performance markets.

#AI #ChipDesign #Innovation #TechCritique #YC

The Tech Utopia Fantasy is Over: The article explores the shift from an optimistic vision of technology’s potential to a more cynical perspective on its role in society. Initially, tech advancements promised a future of ease and interconnectedness, with media portraying a bright horizon filled with innovation and comfort. However, the reality has evolved into a complex interplay of benefits and overshadowing issues, such as misinformation, disinformation, and environmental concerns. The utopian dream of technology solving major global issues like poverty and discrimination is critiqued, pointing out the growing awareness of tech companies’ failure to deliver on these promises while contributing to new societal problems.

#TechUtopia #TechCritique #SocialImpact #FutureOfTech #InnovationChallenge

Regards,

[ED: If you’d like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com and cross-posted on Medium

hello@matthewsinclair.com | matthewsinclair.com | bsky.app/@matthewsinclair.com | masto.ai/@matthewsinclair | medium.com/@matthewsinclair | xitter/@matthewsinclair