QFM061: Machine Intelligence Reading List April 2025

Everything that I found interesting last month about machine intelligence and AI technology.

Tags: qfm, machine, intelligence, ai, reading, list, april, 2025

Source: Photo by Mike Kononov on Unsplash

This month’s Machine Intelligence Reading List begins with conversational interfaces under scrutiny. The Case Against Conversational Interfaces argues that whilst natural language interfaces appear intuitive, they prove slower and less efficient than traditional methods like keyboard shortcuts. This critique extends to broader questions about AI interface design, with AI + UX: design for intelligent interfaces outlining principles for explainability, error management, and multimodal interaction to enhance trust in AI-driven systems.

Agent reliability emerges as a central concern, challenging the focus on capability. AI Agents: Less Capability, More Reliability, Please advocates for prioritising trustworthy AI systems over impressive ones, whilst The Agency, Control, Reliability (ACR) Tradeoff for Agents demonstrates through experiments that restricting agent autonomy can enhance performance reliability. This connects to infrastructure developments like Announcing the Agent2Agent Protocol (A2A), which provides standardised methods for AI agents to collaborate across platforms, and Beyond APIs: Software Interfaces in the Agent Era, which explores self-describing, goal-oriented interfaces for dynamic agent interactions.

Future scenarios receive substantial attention, from speculative to practical. AI 2027: Envisioning AI’s Future by 2027 presents concrete narratives about superhuman AI impacts, whilst AI masters Minecraft: DeepMind program finds diamonds without being taught demonstrates current AI achievements through Dreamer’s reinforcement learning approach. Practical applications appear in Building an AI That Watches Rugby and Turn a Codebase into Easy Tutorial with AI, showing AI’s expanding role in domain-specific analysis and educational content generation.

Economic impacts receive measured analysis. Generative AI is not replacing jobs or hurting wages at all, economists claim reports research among 25,000 Danish workers showing minimal economic effects despite widespread AI adoption. This measured perspective aligns with AI as Normal Technology, which frames AI as a tool requiring gradual integration rather than transformative disruption, and The Coming Knowledge-Work Supply-Chain Crisis, which identifies human judgement as the bottleneck as AI accelerates output production.

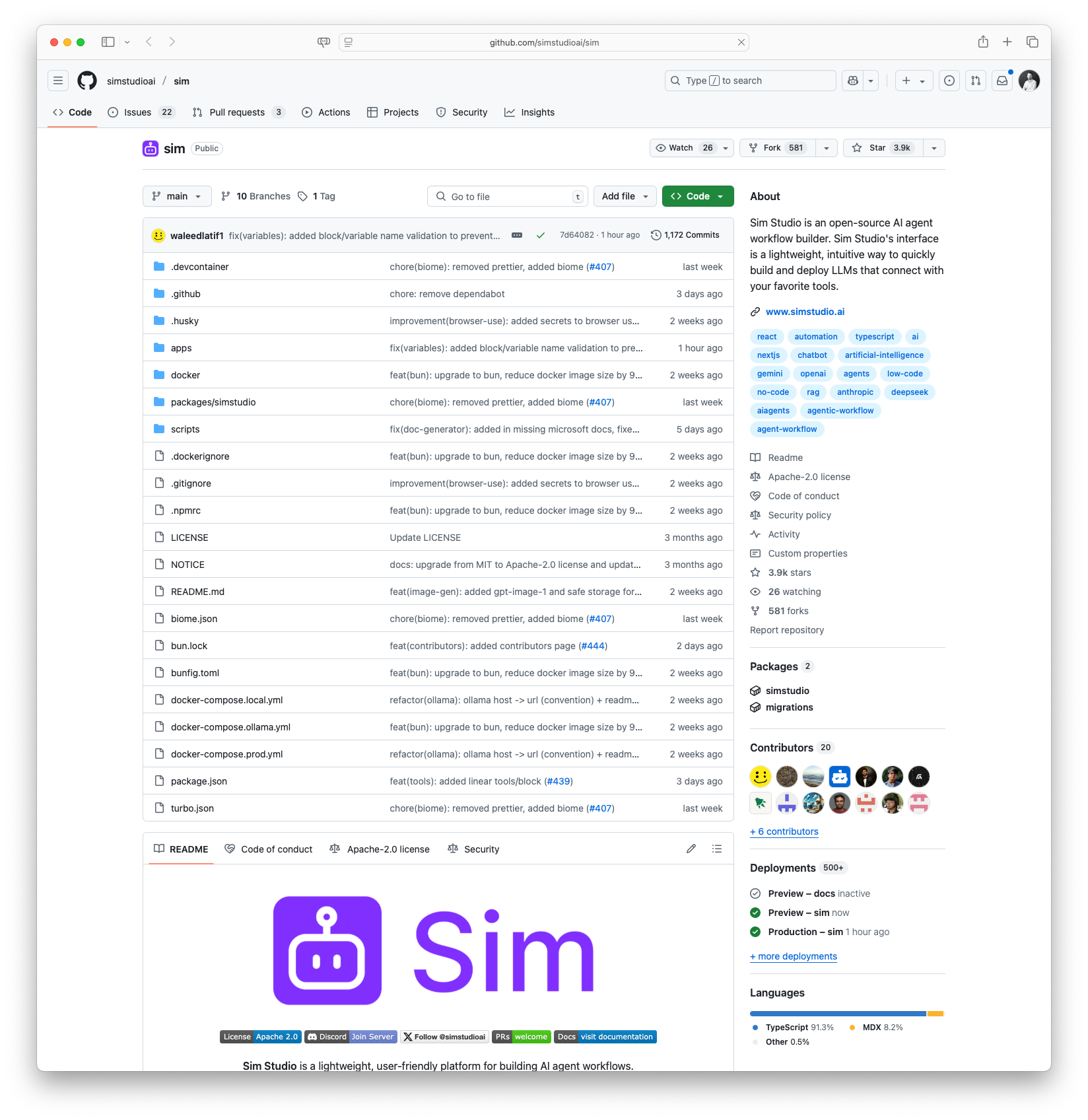

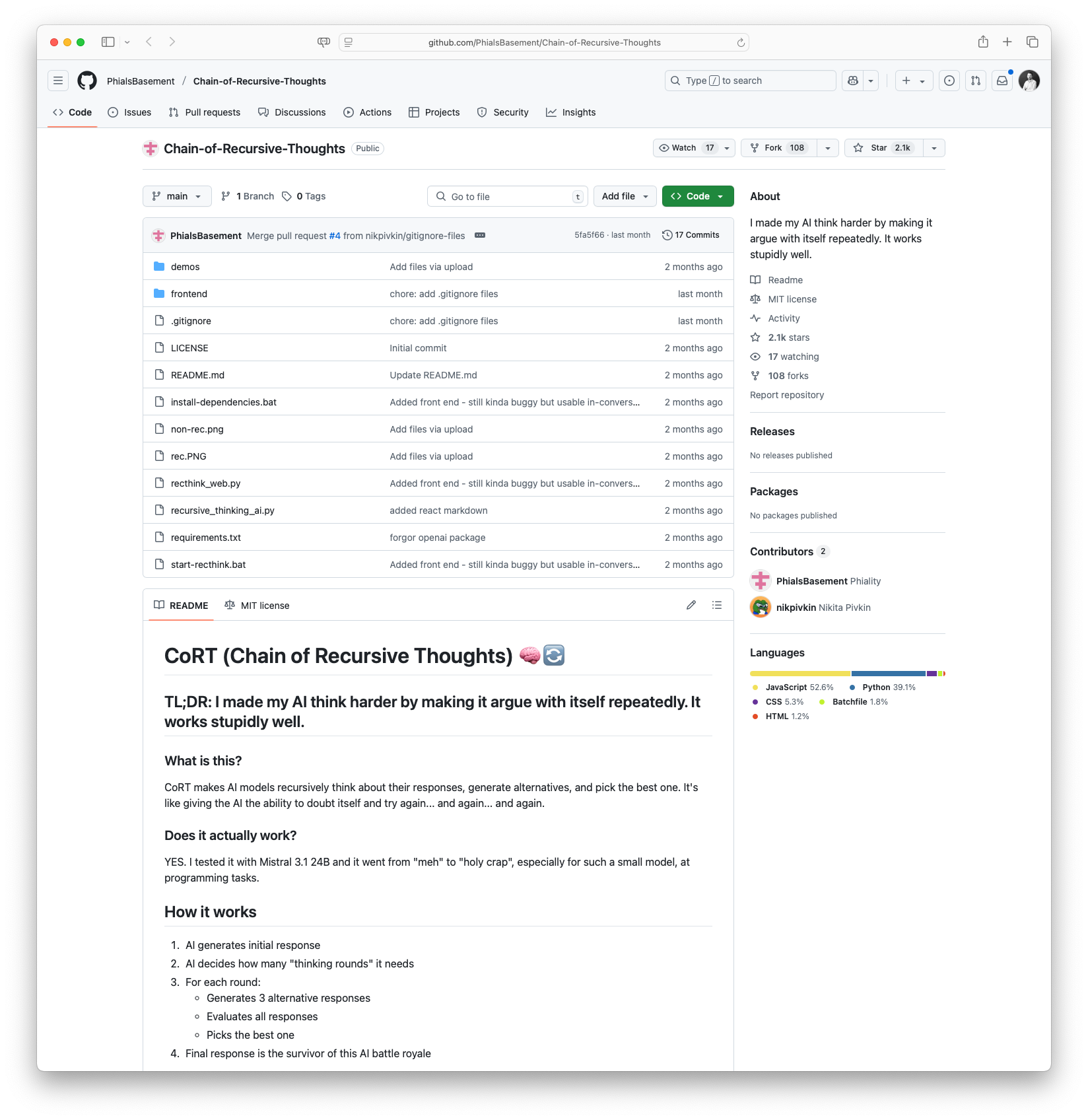

Programming and development tools evolve in multiple directions. Why LLM-Powered Programming is More Mech Suit Than Artificial Human positions AI tools as capability amplifiers requiring human oversight, whilst The Problem with “Vibe Coding” distinguishes between personal coding and product development requirements. Practical frameworks emerge through LLMs - A Ghost in the Machine, Sim Studio’s visual workflow builder, and Chain‑of‑Recursive‑Thoughts, which enables AI models to generate multiple alternatives and recursively refine outputs.

Theoretical foundations receive examination through educational resources and research insights. The StatQuest Illustrated Guide To Machine Learning and Understanding Machine Learning: From Theory to Algorithms provide accessible explanations of fundamental concepts, whilst Understanding the Basics of a Minimal HTML Structure offers structured video lectures on neural networks. Market dynamics appear in Token Arbitrage, which highlights pricing inefficiencies in foundation model tokens, and MCPs, Gatekeepers, and the Future of AI, explaining how Model Context Protocols will transform chatbots into active agents.

Development perspectives conclude with The skill of the future is not ‘AI’, but ‘Focus’, emphasising careful review of AI outputs over blind acceptance, and Your phone isn’t secretly listening to you, but the truth is more disturbing, which debunks listening myths whilst highlighting sophisticated behavioural data collection methods.

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

The Case Against Conversational Interfaces: The article critiques the periodic excitement surrounding conversational interfaces, such as virtual assistants and large language models, as the future of computing. It argues that while natural language interfaces are perceived as intuitive, they are often slower and less efficient than traditional methods, such as keyboard shortcuts and graphical user interfaces. Despite advancements in AI, the author suggests that conversational UIs should complement, not replace, existing paradigms to enhance productivity without compromising on speed and convenience.

#ConversationalInterfaces #AI #NaturalLanguage #HCI #Productivity

AI 2027: Envisioning AI’s Future by 2027: The document ‘AI 2027’ presents scenarios predicting the impact of superhuman AI, suggesting it may exceed that of the Industrial Revolution. This speculative forecast is informed by trend extrapolations, expert feedback, and simulation exercises, and envisions AI’s role over the years leading up to 2027. The team, including authors such as Daniel Kokotajlo and Scott Alexander, offers concrete narratives to encourage discussion about AI’s potential futures and invites alternative viewpoints, with incentives for productive scenarios.

#AI2027 #Futurism #TechRevolution #SuperhumanAI #AIImpact

AI masters Minecraft: DeepMind program finds diamonds without being taught: DeepMind’s AI, Dreamer, has successfully completed the complex task of collecting diamonds in Minecraft without human guidance. This achievement marks a significant step towards developing AI systems that can generalize learning across different domains. Unlike earlier systems that relied on video demonstrations, Dreamer employs a trial-and-error method known as reinforcement learning, building a model of its environment to imagine future scenarios and guide decisions.

#AI #DeepMind #Minecraft #ReinforcementLearning #Technology

AI Agents: Less Capability, More Reliability, Please: The article makes the case that AI agents should prioritize reliability over increased capability. The emphasis is on creating AI systems that users can trust, even if that makes them less capable in some respects. The author argues that by focusing on reliability, developers can build AI agents that are more effective and beneficial in the long term.

#AI #Technology #Reliability #Innovation #Future

Beyond APIs: Software Interfaces in the Agent Era: The article explores the evolution of software interfaces from traditional APIs to more adaptive, agent-oriented mechanisms. It argues that while APIs have served well for structured and predictable interactions, they fall short in dynamic environments where AI agents operate. These agents require flexible, real-time interfacing methods that go beyond static API contracts to achieve efficient functioning.

To address this, the author advocates for self-describing, goal-oriented interfaces that allow agents to discover capabilities and interact dynamically without predefined steps. The discussion includes prospective models such as LLM-assisted tools and dynamically discoverable interfaces, emphasizing a shift towards more intelligent and adaptable software interactions.

#AI #SoftwareDevelopment #APIs #PortiaAI #TechInnovations
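
To make the idea of a self-describing, goal-oriented interface a little more concrete, here is a minimal Python sketch. It is not taken from the article: `Capability`, `SelfDescribingService` and `pick_capability` are hypothetical names, and the keyword-overlap matching is only a toy stand-in for whatever reasoning an LLM-driven agent would actually apply.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class Capability:
    """One operation the service can perform, described in plain language."""
    name: str
    description: str
    parameters: Dict[str, str]  # parameter name -> human-readable type hint
    handler: Callable[..., Any]


class SelfDescribingService:
    """Hypothetical service that publishes its own capabilities at runtime."""

    def __init__(self) -> None:
        self._capabilities: Dict[str, Capability] = {}

    def register(self, capability: Capability) -> None:
        self._capabilities[capability.name] = capability

    def describe(self) -> List[Dict[str, Any]]:
        """Return a machine-readable description that an agent can inspect."""
        return [
            {"name": c.name, "description": c.description, "parameters": c.parameters}
            for c in self._capabilities.values()
        ]

    def invoke(self, name: str, **kwargs: Any) -> Any:
        return self._capabilities[name].handler(**kwargs)


def pick_capability(goal: str, descriptions: List[Dict[str, Any]]) -> str:
    """Toy agent logic: pick the capability whose description shares most words with the goal."""
    goal_words = set(goal.lower().split())

    def overlap(description: Dict[str, Any]) -> int:
        return len(goal_words & set(description["description"].lower().split()))

    return max(descriptions, key=overlap)["name"]


# Assumed example capabilities, purely for illustration.
service = SelfDescribingService()
service.register(Capability(
    name="create_invoice",
    description="create an invoice for a customer",
    parameters={"customer": "str", "amount": "float"},
    handler=lambda customer, amount: f"invoice for {customer}: {amount:.2f}",
))
service.register(Capability(
    name="refund_payment",
    description="refund a payment to a customer",
    parameters={"payment_id": "str"},
    handler=lambda payment_id: f"refunded {payment_id}",
))

# The agent starts from a goal, discovers what is available, then acts.
chosen = pick_capability("create an invoice for Acme", service.describe())
result = service.invoke(chosen, customer="Acme", amount=120.0)
print(chosen, "->", result)
```

The point of the sketch is the order of operations: the agent begins with a goal and a `describe()` call rather than a hard-coded endpoint, so new capabilities can be registered without changing the agent.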

The StatQuest Illustrated Guide To Machine Learning: Josh Starmer’s ‘The StatQuest Illustrated Guide To Machine Learning’ is designed to make machine learning accessible and less intimidating by breaking down complex algorithms into simple, digestible explanations. The book provides intuitive visualizations to enhance understanding, making it ideal for beginners who wish to build a conceptual foundation beyond mere equations without oversimplifying the subject matter.

#MachineLearning #AI #DataScience #JoshStarmer #Education

Announcing the Agent2Agent Protocol (A2A): The newly launched Agent2Agent (A2A) protocol is set to revolutionize the way AI agents collaborate across different platforms and systems. This open protocol facilitates seamless communication among AI agents built by different vendors, enabling them to handle tasks more autonomously and efficiently. Supported by over 50 technology partners like Atlassian, Box, and SAP, the A2A protocol aims to enhance productivity in various enterprise applications by ensuring a standardized method of interoperability. This initiative marks a significant step towards achieving a collaborative AI ecosystem that can solve complex problems and drive innovation in business operations.

#AI #AgentInteroperability #A2AProtocol #EnterpriseTech #Innovation

Turn a Codebase into Easy Tutorial with AI: This project uses a concise, 100-line PocketFlow LLM framework to crawl GitHub repositories or local code directories, build a knowledge base, and generate beginner-friendly tutorials that explain code structure, abstractions, and interactions with clear visualisations. It helps users understand complex codebases quickly without prior experience.

#AI #CodeTutorial #PocketFlow #LLM #BeginnerFriendly

LLMs - A Ghost in the Machine: The article explores the integration of Large Language Models (LLMs) into existing systems, offering a guide on identifying use cases and selecting the right model. It emphasizes the benefits of combining LLMs with traditional system architectures to create adaptable, language-driven systems. The piece also discusses data cleanup and enrichment using LLMs and explores prompt templating for seamless integration. Additionally, it highlights the potential for LLMs to adapt to varied problem sets, enhancing system functionality.

#LLM #MachineLearning #AI #SystemIntegration #DataScience

The Agency, Control, Reliability (ACR) Tradeoff for Agents: The article delves into developing AI agents capable of high-agency tasks with the added need for reliability and consistency. Through experiments, it shows a trade-off between agent autonomy and performance reliability. The “Give Fin a Task” approach proposes a system that slightly restricts the agent’s autonomy to enhance performance, suggesting that lowering agency might increase reliability in achieving task success. This method benefits customer support by improving reliability in handling complex tasks and achieving better customer satisfaction.

#AI #MachineLearning #CustomerSupport #Automation #Reliability

Understanding Machine Learning: From Theory to Algorithms: The book Understanding Machine Learning: From Theory to Algorithms introduces foundational principles of machine learning—formalising concepts like PAC learning, generalisation and convexity—then develops practical algorithms such as stochastic gradient descent, neural networks and structured output learning while addressing computational complexity and emerging theoretical ideas. It targets advanced undergraduates and beginning graduate students in computer science, maths, engineering or statistics.

#MachineLearning #MLTheory #Algorithms #PACLearning #GraduateText

Understanding the Basics of a Minimal HTML Structure: The Understanding Deep Learning book website offers a structured set of video lectures covering theoretical and practical aspects of neural networks across 12 chapters, supplemented by recent blog content on topics like Gaussian processes, authored by Tamer Elsayed at Qatar University.

#DeepLearning #NeuralNetworks #GaussianProcesses #MLLectures #AIEducation

The Problem with “Vibe Coding”: In “The Problem with Vibe Coding” by Dylan Beattie, the distinction between coding and product development is explored. Beattie highlights how many in tech conflate ‘works on my machine’ code, often thrown together for personal tasks, with viable products that require a robust development process involving internationalization, concurrency, and more. The article underscores the importance of understanding these differences even as tools like Copilot empower more people to code.

#Coding #ProductDevelopment #TechInsights #Programming #VibeCoding

Generative AI is not replacing jobs or hurting wages at all, economists claim: A new study by economists suggests that, despite widespread concern, generative AI, including tools like ChatGPT, has not significantly affected wages or job availability. Research conducted among 25,000 workers in Denmark across 11 occupations found that AI has yet to create new economic benefits. While AI chatbots are increasingly popular and people are encouraged to use them, the expected productivity gains have not materialized to any significant degree. The study highlights that even though firms are investing heavily in AI infrastructure, the expected economic transformation has not been substantial, with users reporting only minimal time savings.

#GenerativeAI #Economy #Jobs #ArtificialIntelligence #Technology

Building an AI That Watches Rugby: The article explores the development of an AI system that observes rugby matches to provide a richer commentary experience by analyzing non-eventful data, such as referee audio and subtle gameplay tactics. It explains how the AI extracts essential game data, like the score and game clock, using image recognition and audio transcription tools. Challenges like high API costs for vision models and the potential of integrating OCR tools are discussed. The article highlights the broader implications for AI in sports, emphasizing the potential to deliver deeper insights and storytelling that go beyond traditional stats.

#AI #Rugby #SportsTech #DataScience #Innovation

Why LLM-Powered Programming is More Mech Suit Than Artificial Human: This article explores how LLM-powered programming tools like Claude Code amplify, rather than replace, a developer’s capabilities. The author draws a parallel to Ripley’s Power Loader in the movie ‘Aliens’, highlighting that these tools function as amplifiers to enhance what human programmers can achieve, much like an exoskeleton. However, the piece warns that while AI tools accelerate code generation, they also require constant human vigilance to avoid missteps, as they may make decisions that don’t align with overall project goals. The author emphasizes the importance of architectural thinking, pattern recognition, and technical judgment over raw coding ability, drawing on years of experience to ensure successful outcomes.

#AI #Programming #MechSuit #LLM #TechInsights

Your phone isn’t secretly listening to you, but the truth is more disturbing: This article delves into the persistent conspiracy theory that smartphones listen to private conversations to target advertisements, a notion often reported by users who receive eerily relevant ads. Despite high-profile alleged exposés, such as Cox Media Group’s ‘Active Listening’, tech giants like Facebook, Google, and Apple have consistently denied these claims, and the evidence suggests that more complex, less direct methods are at play. The article points to a 2019 study by Wandera, which debunked the myth of continuous listening by showing no detectable changes in data or battery use in phones subjected to controlled sound environments. Targeted advertising instead relies on a more nuanced approach built on collected behavioral data.

#Privacy #Smartphones #Surveillance #Advertising #TechDebunked

AI as Normal Technology: The article by Arvind Narayanan and Sayash Kapoor presents a perspective of AI as “normal technology” rather than a potential superintelligence. It argues against the view of AI as a separate intelligent entity, instead proposing that AI should be seen as a tool that humans can remain in control of. The authors explore this concept through several parts, discussing how AI adoption, diffusion, and application development happen at different paces, emphasizing the slow and deliberate process required for transformative technologies to integrate into society. The authors predict that AI’s societal impact will be gradual and controlled, with a focus on continuity and adaptation rather than drastic policy interventions or the need for breakthroughs.

#AI #Technology #Innovation #Democracy #FutureTech

MCPs, Gatekeepers, and the Future of AI: Charlie Graham explains that Model Context Protocols (MCPs) will evolve today’s passive chatbots into active AI agents capable of performing tasks, with gatekeepers ensuring responsible deployment.

#AI #Agents #MCPs #ResponsibleAI #Innovation

The Coming Knowledge-Work Supply-Chain Crisis: Scott Werner argues that AI is accelerating output production in knowledge work, but organisational decision-making processes have not kept pace, making human judgement the new bottleneck as review and oversight struggle to keep up.

#KnowledgeWork #AIAgents #HumanJudgement #Productivity #SupplyChainCrisis

Token Arbitrage: Jack Arenas highlights that foundation model tokens expose a significant pricing inefficiency, as providers like OpenAI and Anthropic charge the same rate for tokens regardless of use case, enabling savvy players to profit from arbitrage akin to early cloud infrastructure strategies.

#TokenArbitrage #AIEconomics #FoundationModels #MarketInefficiency #AICommodities

Sim Studio AI: Sim Studio offers a drag‑and‑drop, Figma‑style interface to build, test and deploy multi‑agent LLM workflows that connect with tools like Slack, Gmail, Pinecone and GitHub, while providing observability, branching logic, looping, simulation and rapid iteration—all in a fully open‑source, Apache‑licensed environment.

#AIAgents #WorkflowBuilder #OpenSource #DragAndDrop #AgentObservability

Chain‑of‑Recursive‑Thoughts (CoRT): The Chain‑of‑Recursive‑Thoughts (CoRT) framework enhances AI reasoning by having a model generate an initial response, create multiple alternatives, assess them, and recursively refine its output until the best one emerges. The approach is reported to improve performance on complex tasks substantially, even with smaller models. Reddit users report that CoRT works “stupidly well” and liken it to enabling the AI to “argue with itself repeatedly”.

#AIReasoning #RecursiveThoughts #LLMImprovement #SelfCritique #OpenSource
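
The generate, propose, assess, refine loop described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the CoRT project's actual code: `recursive_refine` and the three `toy_*` functions are hypothetical names, and in practice each callable would wrap a call to a language model rather than simple string manipulation.

```python
from typing import Callable, List


def recursive_refine(
    prompt: str,
    generate: Callable[[str], str],
    propose_alternatives: Callable[[str, str, int], List[str]],
    score: Callable[[str, str], float],
    rounds: int = 3,
    n_alternatives: int = 2,
) -> str:
    """Keep the best answer so far and challenge it with new alternatives each round."""
    best = generate(prompt)  # initial response
    for _ in range(rounds):
        # The current best competes against freshly proposed alternatives.
        candidates = [best] + propose_alternatives(prompt, best, n_alternatives)
        # Assess every candidate and carry the winner into the next round.
        best = max(candidates, key=lambda candidate: score(prompt, candidate))
    return best


# Toy stand-ins (assumptions) so the sketch runs without any model.
def toy_generate(prompt: str) -> str:
    return "draft"


def toy_alternatives(prompt: str, current: str, n: int) -> List[str]:
    # Each alternative is a slightly longer variant of the current answer.
    return [current + "!" * (i + 1) for i in range(n)]


def toy_score(prompt: str, candidate: str) -> float:
    # Pretend that longer answers are better; a real scorer would ask a model.
    return float(len(candidate))


answer = recursive_refine("Explain recursion", toy_generate, toy_alternatives, toy_score)
print(answer)
```

Because the current best answer is always included among the candidates, the selected answer's score can never get worse from one round to the next under a fixed scorer. The quality of the result therefore depends almost entirely on how good the scoring step is.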

The skill of the future is not ‘AI’, but ‘Focus’: The article argues that while AI and Large Language Models (LLMs) are powerful tools for engineers, their outputs should be reviewed carefully due to inherent biases and inconsistencies. The author emphasizes the importance of focusing on the reasoning behind LLM-generated solutions instead of accepting them blindly, as relying too heavily on these models can lead to atrophy in engineers’ problem-solving skills. Ultimately, the skill of focus is highlighted as crucial for handling complex challenges in the future.

#AI #Focus #ProblemSolving #Engineers #LLMs

Regards,

M@

[ED: If you’d like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com and cross-posted on Medium.

hello@matthewsinclair.com | matthewsinclair.com | bsky.app/@matthewsinclair.com | masto.ai/@matthewsinclair | medium.com/@matthewsinclair | xitter/@matthewsinclair