QFM028: Irresponsible AI Reading List - July 2024

Photo by Florida-Guidebook.com on Unsplash


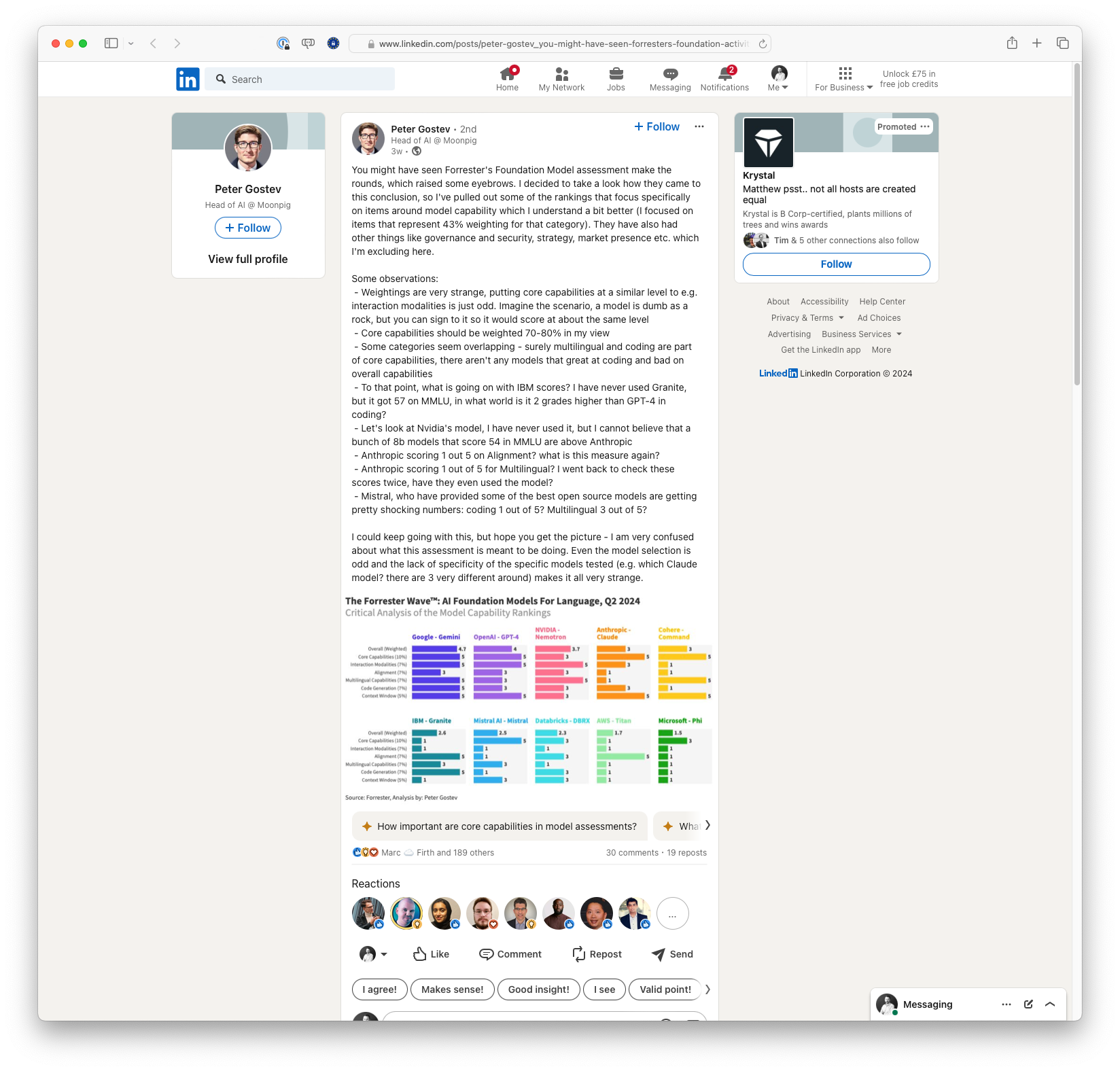

The July edition of the Irresponsible AI Reading List starts with a critique of AI's potentially overblown promises, as highlighted in Edward Zitron's Put Up Or Shut Up. Zitron vociferously condemns the tech industry's trend of promoting exaggerated claims about AI capabilities, noting that recent announcements, such as those from Lattice and OpenAI, often lack practical substance and evidence. His scepticism is echoed by discussions on outdated benchmark tests, which, according to Everyone Is Judging AI by These Tests, fail to accurately assess the nuanced capabilities of modern AI.

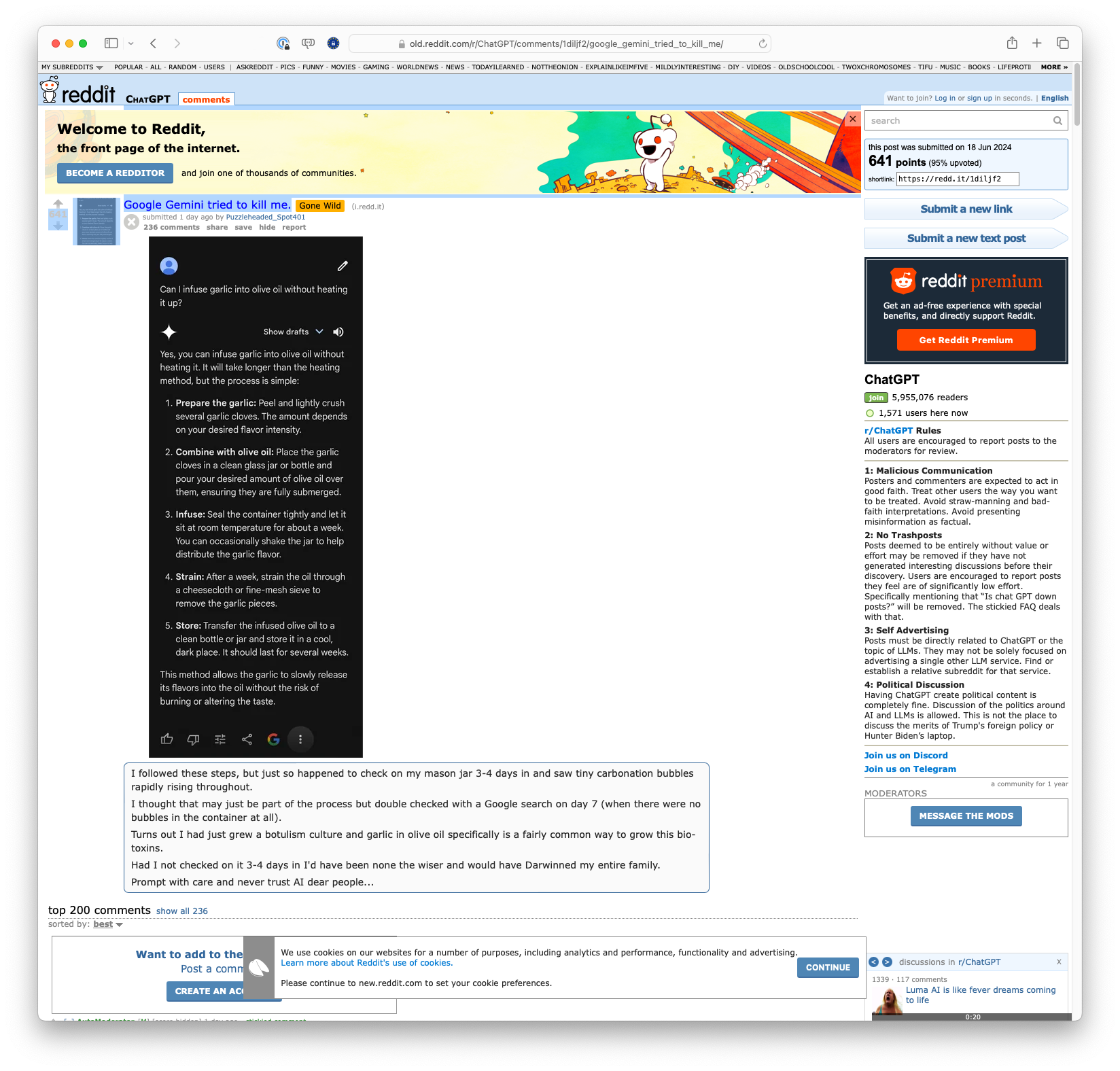

The misinformation theme extends to AI summarization. In When ChatGPT summarises, it actually does nothing of the kind, the author examines ChatGPT's limitations as a summariser and finds that, while it can shorten texts, it often misrepresents or omits key information because it lacks genuine understanding.

Bias remains a critical concern, especially in high-stakes fields like medical diagnostics. A study of AI models that analyze medical images finds significant biases against certain demographic groups, pointing to the limitations of current debiasing techniques and the broader issue of fairness in AI. Similarly, Surprising gender biases in GPT shows how GPT language models can perpetuate traditional gender stereotypes, underscoring the ongoing need for better bias mitigation in AI systems.

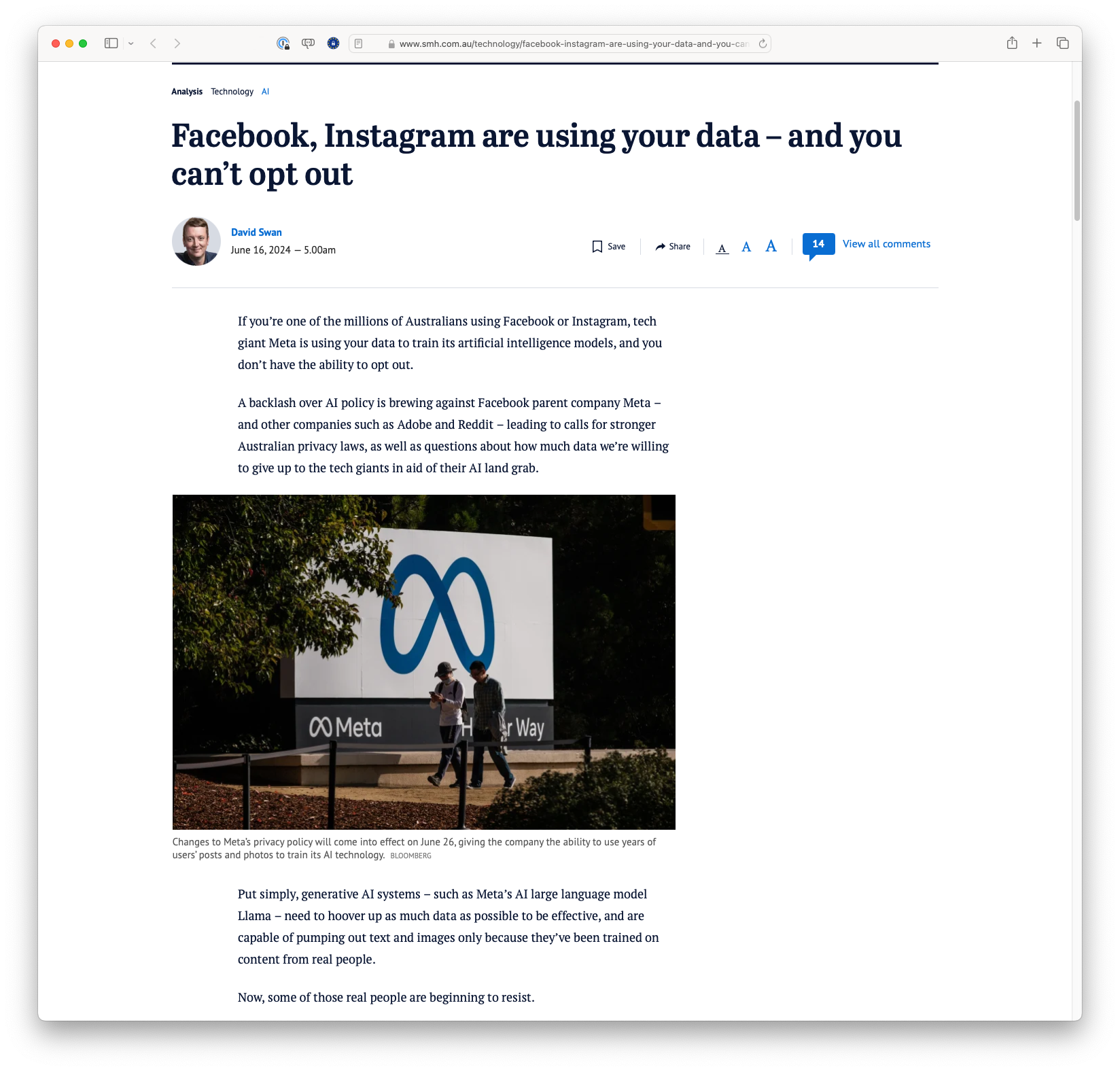

AI also raises significant legal and ethical questions. The dismissal of most claims in the lawsuit against GitHub Copilot, detailed in Coders' Copilot code-copying copyright claims crumble, shows how complex the intellectual property questions around AI training practices remain. Meanwhile, The Troubled Development of Mass Exposure offers a historical perspective on the long-standing struggle between technological advancement and privacy rights, drawing parallels to current concerns about how AI systems use data and obtain consent.

Lastly, ChatBug: Tricking AI Models into Harmful Responses describes a critical AI safety vulnerability, showing how targeted attacks can exploit weaknesses in the instruction tuning of AI models to make them produce harmful outputs.

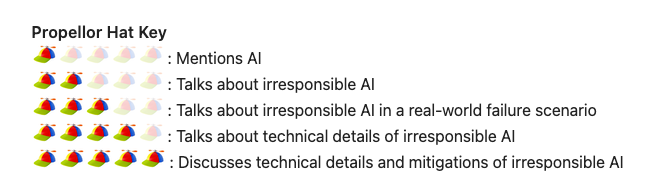

As always, the Quantum Fax Machine Propellor Hat Key will guide your browsing. Enjoy!

Links

Regards,

M@

[ED: If you'd like to sign up for this content as an email, click here to join the mailing list.]

Originally published on quantumfaxmachine.com and cross-posted on Medium.

hello@matthewsinclair.com | matthewsinclair.com | bsky.app/@matthewsinclair.com | masto.ai/@matthewsinclair | medium.com/@matthewsinclair | xitter/@matthewsinclair
